In this article, we introduce Kubernetes: why it matters, what it is, and how it works. It is written for beginners and DevOps enthusiasts who want to understand how Kubernetes simplifies deploying and managing containerized applications.

Before Kubernetes, Docker transformed how developers package applications. It allowed them to bundle an application and its dependencies into a single, portable unit called a container. This worked well for small deployments, but once applications grew to hundreds or thousands of containers, the following problems appeared:

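- Scalability: adding, removing, and placing containers by hand as demand changes does not scale.

- Multi-Cloud Deployments: running the same workloads consistently across different clouds and data centers is hard.

- Security & Resource Management: isolating workloads, handling credentials, and sharing CPU and memory fairly across many containers.

- Rolling Updates & Zero-Downtime Deployments: replacing running containers with a new version without interrupting users.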

These are the problems Kubernetes was created to solve. It acts as the “brain”, or orchestrator, for your containers, automatically handling the complex task of managing them at scale.

What is Kubernetes?

Kubernetes, often shortened to K8s (the 8 stands for the eight letters between the K and the s), is an open-source platform that automates the deployment, scaling, and management of containerized applications.

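- Origin: Developed by Google, inspired by its internal cluster-management systems Borg and Omega.

- Launch: Open-sourced by Google in 2014; version 1.0 was released in July 2015.

- CNCF Donation: Donated in 2015 to the Cloud Native Computing Foundation (CNCF), which now maintains it.

- Adoption: Widely used today and offered as a managed service by all major cloud providers (for example, Amazon EKS, Google Kubernetes Engine, and Azure Kubernetes Service).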

- Name Meaning: Kubernetes comes from Greek, meaning “helmsman” or “pilot”, symbolizing its role in steering applications.

Think of Kubernetes as an orchestra conductor. Each container is a musician. While you can manage a few musicians yourself, you need a conductor to coordinate an entire orchestra playing a complex symphony. You simply give the conductor the sheet music (your desired configuration), and they ensure every musician plays their part correctly, replacing anyone who falls ill and bringing in more players when the music calls for it.

Some features of K8s:

Automated Scheduling

The Kubernetes scheduler (kube-scheduler) decides which node each new Pod should run on.

- It looks at the resource requests (CPU, memory) declared by the Pod.

- It considers each node's allocatable capacity, the resources already requested by other Pods on that node, and affinity/anti-affinity rules.

- It aims to spread workloads across the cluster for high availability and efficient utilization.

Example: If 80% of Node A's CPU is already requested by other Pods, the scheduler may choose Node B to host the new Pod.
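The filter-then-score idea can be sketched in a few lines of Python. This is a simplified model, not kube-scheduler's actual code. It first keeps only the nodes whose unrequested CPU and memory can fit the Pod's requests. It then prefers the node with the most capacity left over, loosely following the default "least allocated" scoring strategy. The node names and numbers are made up.

```python
from dataclasses import dataclass
from typing import List, Optional

GiB = 1024 ** 3


@dataclass
class Node:
    name: str
    cpu_allocatable: float  # CPU cores available to Pods
    cpu_requested: float    # cores already requested by Pods on this node
    mem_allocatable: int    # bytes available to Pods
    mem_requested: int      # bytes already requested by Pods on this node


def fits(node: Node, cpu: float, mem: int) -> bool:
    """Filter: the Pod's requests must fit into the node's unrequested capacity."""
    return (node.cpu_requested + cpu <= node.cpu_allocatable
            and node.mem_requested + mem <= node.mem_allocatable)


def score(node: Node, cpu: float, mem: int) -> float:
    """Score: average fraction of CPU and memory left unrequested after placing the Pod."""
    cpu_left = (node.cpu_allocatable - node.cpu_requested - cpu) / node.cpu_allocatable
    mem_left = (node.mem_allocatable - node.mem_requested - mem) / node.mem_allocatable
    return (cpu_left + mem_left) / 2


def schedule(nodes: List[Node], cpu: float, mem: int) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # no node fits: the Pod would stay Pending
    return max(feasible, key=lambda n: score(n, cpu, mem)).name


nodes = [
    Node("node-a", cpu_allocatable=4.0, cpu_requested=3.2, mem_allocatable=8 * GiB, mem_requested=6 * GiB),
    Node("node-b", cpu_allocatable=4.0, cpu_requested=1.0, mem_allocatable=8 * GiB, mem_requested=2 * GiB),
]
print(schedule(nodes, cpu=0.5, mem=1 * GiB))  # node-b
```

Here node-a already has 80% of its CPU requested. The Pod would still fit there, but node-b scores much higher. The real scheduler runs many more plugins (affinity, topology spreading, and others) and combines their weighted scores.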

Self-Healing

One of Kubernetes’ strongest features is self-healing, which ensures applications remain available even when failures happen.

K8s achieves this through controllers like ReplicaSet and Deployment:

- If a Pod fails or is deleted, the ReplicaSet automatically creates a new Pod to replace it.

- If a Node goes down, the Pods on it are replaced by new Pods scheduled onto healthy nodes.

- If a container inside a Pod becomes unresponsive, the kubelet can detect it using a liveness probe (a periodic health check) and restart the container.

This removes the need for manual intervention and keeps the system resilient.
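A liveness probe is declared on the container. The sketch below builds a container spec as a Python dictionary, which has the same structure as a YAML or JSON manifest. It then simulates the kubelet's rule of restarting a container after `failureThreshold` consecutive probe failures. The image name, port, and `/healthz` path are placeholders. The simulation deliberately ignores `initialDelaySeconds`, probe timeouts, and restart back-off.

```python
import json

# Hypothetical container: image, port and /healthz path are placeholders.
container = {
    "name": "web",
    "image": "example.com/web:1.0",
    "ports": [{"containerPort": 8080}],
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},
        "initialDelaySeconds": 5,
        "periodSeconds": 10,    # probe every 10 seconds
        "failureThreshold": 3,  # restart after 3 consecutive failures
    },
}


def restart_points(probe_results, failure_threshold=3):
    """Return the probe indexes at which the container would be restarted.

    A successful probe resets the consecutive-failure counter, and so does a restart.
    """
    restarts, failures = [], 0
    for i, healthy in enumerate(probe_results):
        failures = 0 if healthy else failures + 1
        if failures >= failure_threshold:
            restarts.append(i)
            failures = 0
    return restarts


print(json.dumps(container["livenessProbe"], indent=2))
print(restart_points([True, False, False, True, False, False, False]))  # [6]
```

The two isolated failures at the start do not trigger a restart because a success interrupts them. Only the run of three consecutive failures at the end does.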

Rollouts & Rollbacks

Kubernetes allows gradual updates to applications:

- It supports rolling updates, replacing old Pods with new ones gradually (controlled by the maxSurge and maxUnavailable settings) to minimize downtime.

- If something goes wrong, you can roll back to a previous stable version.

Example: If a new version of your app has a bug, running `kubectl rollout undo` restores the previous version of the Deployment.
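A Deployment's rolling update is bounded by two numbers. `maxSurge` is how many extra Pods may exist above the desired count, and `maxUnavailable` is how many may be missing. Both default to 25% of the replicas. The Python sketch below simulates the Pod counts step by step using absolute numbers. It assumes new Pods become ready instantly, whereas the real controller waits for readiness. It illustrates the bounds and is not the controller's algorithm.

```python
def rolling_update(replicas, max_surge, max_unavailable):
    """Return (old, new) Pod counts after each step of a simplified rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total Pods may not exceed replicas + max_surge.
        new = min(replicas, new + max(0, replicas + max_surge - (old + new)))
        # Scale down: keep at least replicas - max_unavailable Pods available
        # (new Pods are assumed to be ready immediately in this sketch).
        old = max(0, old - max(0, (old + new) - (replicas - max_unavailable)))
        steps.append((old, new))
    return steps


print(rolling_update(4, max_surge=1, max_unavailable=0))
# [(4, 0), (3, 1), (2, 2), (1, 3), (0, 4)]
```

With `maxUnavailable` set to 0, capacity never drops below four Pods, at the cost of temporarily running a fifth one. Setting `maxSurge` to 0 instead avoids extra Pods but briefly runs with fewer.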

Scaling & Load Balancing

- Horizontal Pod Autoscaler (HPA): Increases or decreases the number of Pods based on CPU, memory, or custom metrics.

- Load Balancing: A Service distributes traffic across all the ready Pods behind it.

Example: If traffic suddenly spikes, the HPA adds more Pods to handle the load, and the Service spreads requests across them.
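The core HPA rule is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. No scaling happens when the ratio is within a tolerance, which defaults to 0.1. The Python sketch below applies that rule and clamps the result to a min/max range. The `min_replicas`/`max_replicas` defaults are hypothetical. The sketch ignores stabilization windows, Pod readiness handling, and missing metrics, all of which the real controller accounts for.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """HPA rule: ceil(currentReplicas * currentMetric / targetMetric), clamped to a range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave the count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=50))  # 6  (scale out)
print(desired_replicas(6, current_metric=20, target_metric=50))  # 3  (scale in)
print(desired_replicas(4, current_metric=52, target_metric=50))  # 4  (within tolerance)
```

For example, three Pods averaging 90% CPU against a 50% target give 3 × 1.8 = 5.4. That rounds up to 6 Pods.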

Resource Optimization

Kubernetes lets you control how compute resources are allocated:

- Users can define requests (the resources reserved for a container when it is scheduled) and limits (the maximum it is allowed to use) for each container.

- The scheduler places Pods based on their requests to reduce resource contention.

- Add-ons such as the Vertical Pod Autoscaler (VPA) and the Cluster Autoscaler optimize utilization further.

This helps keep nodes from being overcommitted while making efficient use of their capacity.
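Requests and limits are set per container in the `resources` field, using Kubernetes quantity strings. For example, `250m` means 250 millicores (a quarter of a core), and `128Mi` means 128 mebibytes. At runtime, a container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is killed (OOMKilled). The Python sketch below shows the field as a dictionary. It includes small helpers that convert the common quantity formats. They cover only a subset of the suffixes Kubernetes accepts.

```python
import json

# Binary (Ki, Mi, Gi) and decimal (k, M, G) suffixes; a subset of what Kubernetes accepts.
_MEM_SUFFIXES = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3,
                 "k": 1000, "M": 1000 ** 2, "G": 1000 ** 3}


def cpu_cores(q: str) -> float:
    """'250m' (millicores) -> 0.25 cores; '2' -> 2.0 cores."""
    return int(q[:-1]) / 1000 if q.endswith("m") else float(q)


def mem_bytes(q: str) -> int:
    """'128Mi' -> 134217728 bytes; a bare number is already bytes."""
    for suffix, factor in _MEM_SUFFIXES.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)


resources = {
    "requests": {"cpu": "250m", "memory": "128Mi"},  # reserved when scheduling
    "limits": {"cpu": "500m", "memory": "256Mi"},    # enforced ceiling at runtime
}
print(json.dumps({"resources": resources}, indent=2))
print(cpu_cores(resources["requests"]["cpu"]))   # 0.25
print(mem_bytes(resources["limits"]["memory"]))  # 268435456
```

Only the requests influence where the Pod is scheduled. The limits matter only after the container is running.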

Challenges of Containerized Applications

Managing containerized applications, whether with Docker or another container runtime, comes with its own set of challenges, such as:

- Scalability: As the number of containers grows, it becomes challenging to scale them effectively.

- Complexity: Managing numerous containers, each with its own role in a larger application, adds complexity.

- Management: Keeping track of and maintaining these containers, ensuring they are updated and running smoothly, requires significant effort.

Kubernetes as a Solution

Now that we have gone through the challenges of running containerized applications, let’s see how Kubernetes handles them.

- Kubernetes steps in as a powerful platform to manage these complexities. It’s an open-source system designed for automating deployment, scaling, and operation of application containers across clusters of hosts.

- It simplifies container management, allowing applications to run efficiently and consistently.

- Kubernetes orchestrates the container lifecycle: it decides where containers run, and it starts, restarts, and stops them according to the organization’s policies.

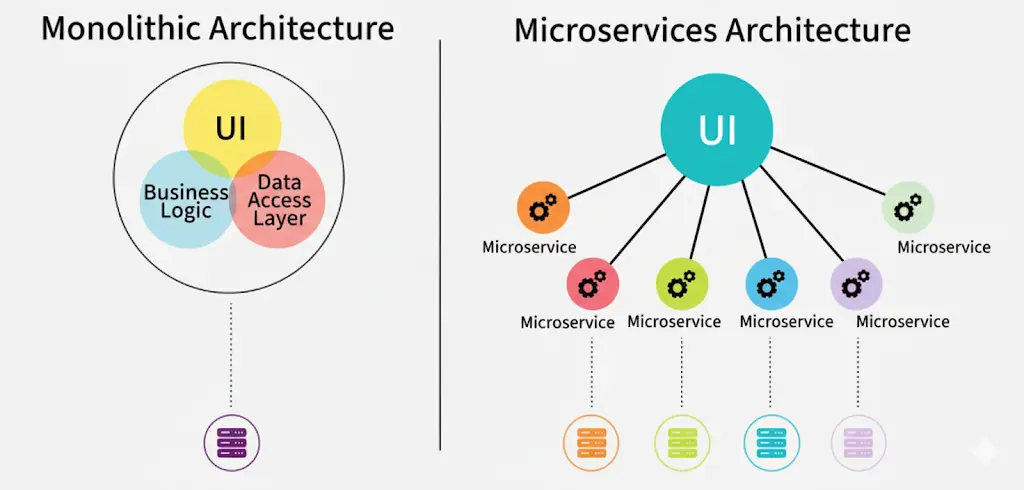

Monolithic Vs Microservices

In the past, applications were built using a monolithic architecture, where everything was interconnected and bundled into one large codebase. This made updates risky. For example, if you wanted to change just the payment module in an e-commerce app, you had to redeploy the entire application, and a small bug could crash the whole system.

To overcome this, the industry moved toward microservices, where each feature (like payments, search, or notifications) is built and deployed independently. This made applications more flexible and scalable.

But microservices brought a new challenge: instead of running one big app, companies now had to manage hundreds or thousands of small containerized services. Containers solved the packaging problem, but without a way to orchestrate them, things got messy. That’s where Kubernetes came in, acting like a smart manager that automates the deployment, scaling, and coordination of all those microservices.

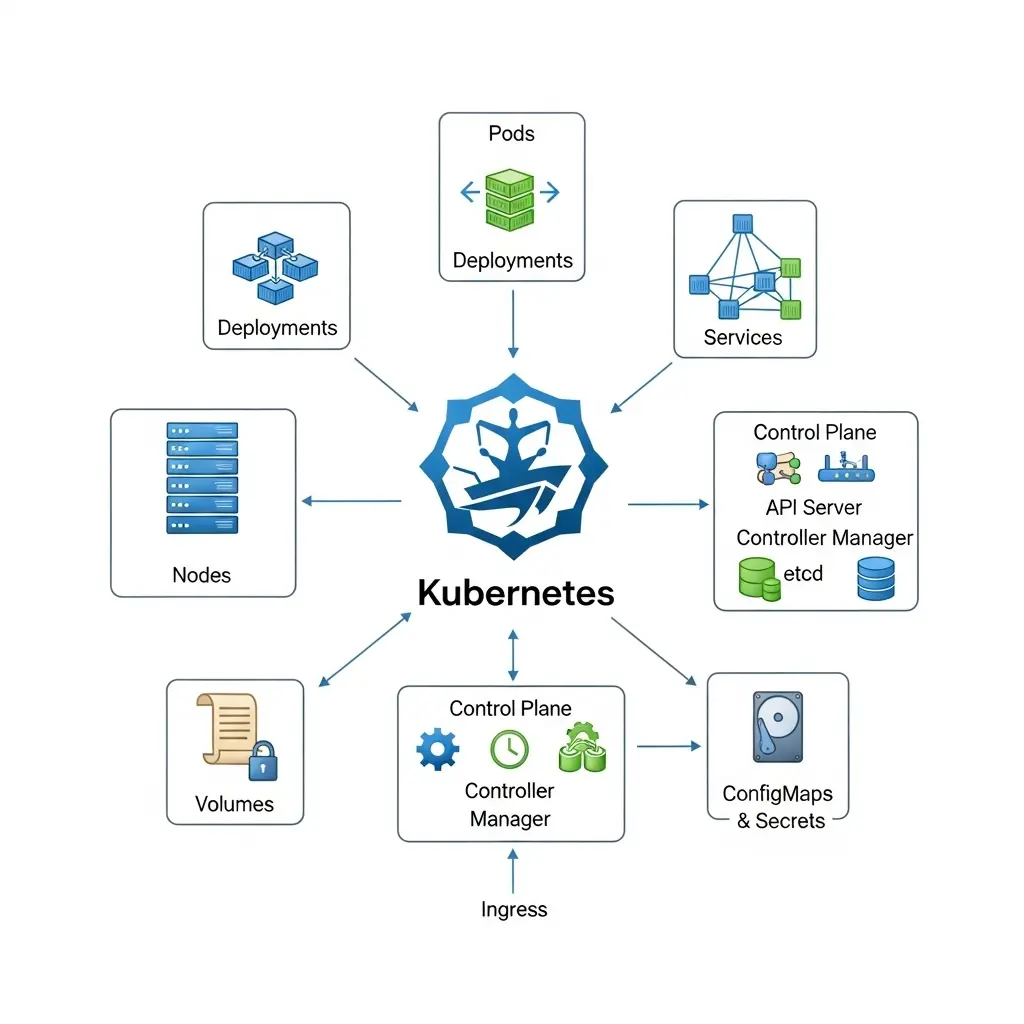

Terminologies in K8s

Think of Kubernetes as a well-organized company where different teams and systems work together to run applications efficiently. Here’s how the key terms fit into this system:

Pod

A Pod is the smallest deployable unit in Kubernetes. It runs one or more tightly coupled containers that share resources such as the network namespace and storage volumes, so containers in the same Pod can talk to each other over localhost. Pods are ephemeral: when a Pod managed by a controller (such as a Deployment) fails, Kubernetes replaces it with a new Pod rather than reviving the same instance.
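Here is a sketch of a two-container Pod. It is built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts just like YAML. The Pod runs an nginx container and a hypothetical "poller" sidecar. The sidecar reaches nginx through `localhost` because both containers share the Pod's network namespace. The names, labels, and image tags are illustrative choices.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {"name": "web", "image": "nginx:1.27", "ports": [{"containerPort": 80}]},
            {
                # Hypothetical sidecar: polls the web container over localhost.
                "name": "poller",
                "image": "busybox:1.36",
                "command": ["sh", "-c",
                            "while true; do wget -qO- http://localhost:80 >/dev/null; sleep 5; done"],
            },
        ]
    },
}

# Save the output as pod.json, then run: kubectl apply -f pod.json
print(json.dumps(pod, indent=2))
```

Because this Pod is created directly rather than by a controller, nothing recreates it if it is deleted. Real workloads usually wrap the Pod template in a Deployment.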

Node

A Node is a worker machine (either physical or virtual) that runs application workloads. Each Node runs a container runtime (such as containerd or CRI-O) to run containers, the Kubelet to interact with the control plane, and Kube-proxy to handle Service networking. The number of Nodes in a cluster can be scaled up or down as demand changes.

Cluster

A cluster is the overall system that brings multiple Nodes together to run containerized workloads. It has two main types of nodes:

- Control Plane (historically called the Master Node): Acts like the brain, making decisions about scheduling, scaling, and maintaining the desired state of the system. Its components include the API Server, Controller Manager, Scheduler, and etcd (the cluster database).

- Worker Nodes: Run the containers and workloads. Each worker is managed by the control plane and reports its health and status.

Deployment

A Deployment is a higher-level object that defines how Pods should be created and managed. It ensures the right number of Pods are always running and supports rolling updates (gradual updates) and rollbacks (reverting to old versions if something fails).
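A minimal Deployment can be sketched as a Python dictionary and printed as JSON for `kubectl apply -f`. The key detail is that `spec.selector.matchLabels` must match the labels in the Pod template. That match is how the Deployment, and the ReplicaSet it creates, recognizes its own Pods. The name `web`, the nginx image, and the rolling-update settings are illustrative choices.

```python
import json

labels = {"app": "web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": labels},  # must match the template labels below
        "strategy": {
            "type": "RollingUpdate",
            # At most 1 extra Pod during an update, and never fewer than 3 available.
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
        },
        "template": {
            "metadata": {"labels": labels},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.27"}]},
        },
    },
}

print(json.dumps(deployment, indent=2))
```

After this Deployment is applied, changing the image starts a rolling update (for example, `kubectl set image deployment/web web=nginx:1.28`).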

ReplicaSet

A ReplicaSet makes sure that a specific number of Pod replicas are always running. For example, if you want 3 Pods of a web app, the ReplicaSet ensures exactly 3 Pods are active, starting new ones if some fail. Deployments usually manage ReplicaSets automatically.
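Controllers like the ReplicaSet work as a control loop: observe the actual state, compare it with the desired state, and act on the difference. The Python class below is a toy model of that loop. It is hypothetical and much simpler than the real controller. Its Pod names are sequential, whereas real ones get random suffixes. It also deletes the newest Pod when scaling down, while the real controller ranks Pods (for example, preferring to delete ones that are not yet running).

```python
class ReplicaSet:
    """Toy model of a ReplicaSet control loop (not the real controller)."""

    def __init__(self, name, replicas):
        self.name = name
        self.replicas = replicas  # desired state
        self.pods = []            # observed state
        self._next_id = 1

    def _new_pod_name(self):
        pod_name = f"{self.name}-{self._next_id}"
        self._next_id += 1
        return pod_name

    def reconcile(self):
        """One pass of the loop: make the number of Pods match the desired count."""
        while len(self.pods) < self.replicas:
            self.pods.append(self._new_pod_name())  # a replacement Pod gets a new name
        while len(self.pods) > self.replicas:
            self.pods.pop()  # remove surplus Pods
        return list(self.pods)


rs = ReplicaSet("web", replicas=3)
print(rs.reconcile())    # ['web-1', 'web-2', 'web-3']
rs.pods.remove("web-2")  # simulate a crashed Pod
print(rs.reconcile())    # ['web-1', 'web-3', 'web-4']
rs.replicas = 2          # scale down
print(rs.reconcile())    # ['web-1', 'web-3']
```

Note that the crashed Pod `web-2` is not brought back. A new Pod, `web-4`, takes its place, which matches how Kubernetes treats Pods as disposable.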

Service

A Service is an abstraction that gives a set of Pods, selected by their labels, a stable IP address and DNS name. Since Pods are temporary and their IP addresses change, a Service ensures other components can always reach the application. Services also load-balance traffic across the Pods they select.
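A Service finds its Pods through a label selector. The Python sketch below shows a Service manifest as a dictionary, plus an equality-based selector check over a few hypothetical Pods. A Pod is selected when it carries every key/value pair in the selector. Extra labels on the Pod are fine. In a real cluster, only selected Pods that are also ready receive traffic. Within the same namespace, this Service would typically be reachable by the DNS name `web`.

```python
import json

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},                  # send traffic to Pods with this label
        "ports": [{"port": 80, "targetPort": 8080}],  # Service port -> container port
    },
}


def selects(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every selector key/value must be present on the Pod."""
    return all(labels.get(k) == v for k, v in selector.items())


# Hypothetical Pods and their labels.
pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "web-2": {"app": "web"},
    "db-1": {"app": "db"},
}
endpoints = [name for name, labels in pods.items()
             if selects(service["spec"]["selector"], labels)]
print(json.dumps(service, indent=2))
print(endpoints)  # ['web-1', 'web-2']
```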

Ingress

Ingress is an API object that manages external HTTP/HTTPS access to Services running in a cluster. Its rules are carried out by an Ingress controller, a reverse proxy such as ingress-nginx that must be installed in the cluster, which directs incoming traffic to the correct Service. With Ingress, you can use host names, TLS/SSL certificates, and path-based routing.
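Path-based routing with `pathType: Prefix` matches paths element by element, split on `/`, and the longest matching path takes precedence. The Python sketch below models just that decision. Its flat rule list is a hypothetical simplification. A real Ingress manifest nests paths under `spec.rules[].http.paths[]`, each with a `pathType` and a `backend.service`. The host names and Service names are made up.

```python
def prefix_match(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match: /api matches /api and /api/v1, but not /apix."""
    rule = [p for p in rule_path.split("/") if p]
    req = [p for p in request_path.split("/") if p]
    return req[: len(rule)] == rule


def route(rules, host, path):
    """Return the backend Service for host + path; the longest matching prefix wins."""
    candidates = [r for r in rules if r["host"] == host and prefix_match(r["path"], path)]
    if not candidates:
        return None  # no rule matches (a real controller might use a default backend)
    return max(candidates, key=lambda r: len(r["path"]))["service"]


rules = [
    {"host": "shop.example.com", "path": "/", "service": "frontend"},
    {"host": "shop.example.com", "path": "/api", "service": "api"},
]
print(route(rules, "shop.example.com", "/api/orders"))  # api
print(route(rules, "shop.example.com", "/apix"))        # frontend
print(route(rules, "other.example.com", "/"))           # None
```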

ConfigMap

A ConfigMap is used to store non-confidential configuration data such as environment variables, app settings, or configuration files. Instead of hardcoding these into your application, you keep them in a ConfigMap, which makes apps easier to manage and update without rebuilding container images.
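A common pattern is to expose every key of a ConfigMap as an environment variable with `envFrom`. The Python sketch below shows both objects as dictionaries. It adds a small hypothetical `resolve_env` helper that models what the container ends up seeing. The ConfigMap name, keys, and image are illustrative. Environment variables are read when the container starts, so a changed ConfigMap only reaches them after the Pod restarts.

```python
import json

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "web-config"},
    "data": {"LOG_LEVEL": "info", "FEATURE_CHECKOUT_V2": "false"},  # values are strings
}

# Container fragment: envFrom turns each ConfigMap key into an environment variable.
container = {
    "name": "web",
    "image": "example.com/web:1.0",
    "envFrom": [{"configMapRef": {"name": "web-config"}}],
}


def resolve_env(container, config_maps):
    """Hypothetical helper: the environment the container would see at startup."""
    env = {}
    for source in container.get("envFrom", []):
        env.update(config_maps[source["configMapRef"]["name"]]["data"])
    return env


print(json.dumps(config_map, indent=2))
print(resolve_env(container, {"web-config": config_map}))
```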

Secret

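A Secret works like a ConfigMap but is intended for sensitive data such as passwords, tokens, and API keys. Secret values are stored base64-encoded, which is an encoding, not encryption, so access to Secrets should be restricted (for example, with RBAC and by enabling encryption at rest for etcd). Secrets can be mounted into Pods as files or exposed as environment variables, keeping sensitive values out of application code and container images.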
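The Python snippet below shows why base64 is not protection. It builds a Secret's `data` field by base64-encoding a hypothetical password, then recovers the original value with a single decode call. The Secret name and password are placeholders. When writing manifests by hand, the `stringData` field accepts plain text, and the API server stores it in encoded form for you.

```python
import base64
import json

password = "s3cr3t!"  # hypothetical value for illustration only

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": base64.b64encode(password.encode()).decode()},
}

print(json.dumps(secret, indent=2))
# Anyone who can read the Secret object can reverse the encoding:
print(base64.b64decode(secret["data"]["password"]).decode())  # s3cr3t!
```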

Persistent Volume (PV)

A Persistent Volume is a cluster-level storage resource whose lifecycle is independent of any individual Pod. Since Pods are temporary, data written to a container’s filesystem is lost when the Pod is replaced. PVs keep data persistent, which is useful for databases and other applications that need long-term storage.
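Pods usually do not reference a PV directly. Instead, they request storage through a PersistentVolumeClaim (PVC), and Kubernetes binds the claim to a matching PV. If the cluster has a default StorageClass, it may provision a new PV automatically. The Python sketch below shows a claim and a Pod spec that mounts it. The claim name, size, image, and mount path are illustrative assumptions.

```python
import json

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "db-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],               # mountable read-write by one node
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

pod_spec = {
    "containers": [{
        "name": "db",
        "image": "postgres:16",
        # Data written here lives on the volume, so it survives Pod replacement.
        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/postgresql/data"}],
    }],
    "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": "db-data"}}],
}

print(json.dumps(pvc, indent=2))
print(json.dumps(pod_spec, indent=2))
```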

Kubelet

The Kubelet is an agent running on each Node. It communicates with the control plane to receive instructions and ensures that Pods and containers are running as defined. If a container crashes, the Kubelet restarts it according to the Pod’s restart policy.
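When a container keeps crashing, the kubelet does not restart it immediately every time. The documented default is an exponential back-off that starts at 10 seconds and doubles up to a cap of five minutes. The kubelet resets the back-off once the container has run for 10 minutes without problems. This pattern is what `CrashLoopBackOff` refers to. The Python function below reproduces that delay sequence under those documented defaults. Newer Kubernetes versions may tune these values.

```python
def crash_loop_delays(restarts, initial=10, cap=300):
    """Delays in seconds before each restart: doubling from `initial`, capped at `cap`."""
    delays, delay = [], initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)
    return delays


print(crash_loop_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```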

Kube-proxy

Kube-proxy is a network component that runs on each Node. It maintains network rules (for example, iptables or IPVS rules) that route traffic sent to a Service to one of the Pods behind it, which is how load balancing across a Service’s Pods is implemented. Pod-to-Pod networking itself is provided by the cluster’s network (CNI) plugin.
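Conceptually, the rules kube-proxy programs translate a Service's virtual IP and port into the address of one backing Pod. The Python sketch below models that translation as a lookup table. All IP addresses are made up. It picks a backend at random, roughly as iptables mode does, while IPVS mode defaults to round-robin. The real work happens in the kernel, not in a user-space function like this.

```python
import random

# Simplified model of node-level rules: Service VIP:port -> ready Pod IP:port endpoints.
service_table = {
    ("10.96.0.10", 80): [("10.244.1.5", 8080), ("10.244.2.7", 8080), ("10.244.3.9", 8080)],
}


def forward(dst_ip, dst_port, rng=random):
    """Rewrite a destination from a Service VIP to one backend Pod address."""
    endpoints = service_table.get((dst_ip, dst_port))
    if endpoints is None:
        return (dst_ip, dst_port)  # not a Service address: leave it unchanged
    if not endpoints:
        raise ConnectionRefusedError("Service has no ready endpoints")
    return rng.choice(endpoints)   # random pick, roughly like iptables mode


print(forward("10.96.0.10", 80))  # one of the three Pod addresses
print(forward("93.184.216.34", 443))
```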

How Kubernetes Works

Kubernetes works like a smart manager for your applications. Imagine you have multiple containers that need to run together, handle traffic, and stay healthy. Kubernetes makes all of this automatic.

- You define what you want: You tell Kubernetes what your application needs using files called manifests (usually written in YAML). For example, you specify how many instances (Pods) of your app you want, what resources (CPU/memory) they need, and how they should connect.

- Kubernetes schedules the work: Once you define your app, Kubernetes decides where to run it. It looks at the available nodes in your cluster and picks the best ones based on available resources and the rules you set.

- It keeps everything running: Kubernetes constantly checks the health of your application. If a container crashes, it is restarted; if a node fails, replacement Pods are started on healthy nodes. This is called self-healing.

- It balances the load: Kubernetes spreads traffic across all ready Pods so no single instance gets overwhelmed. With a Horizontal Pod Autoscaler configured, it can also add Pods automatically to handle extra load.

- It updates apps safely: When you deploy a new version of your app, Kubernetes replaces old Pods gradually, so your app stays online. If something goes wrong, you can roll back to the previous version.

- It makes configuration easy: Kubernetes lets you store settings and secrets separately from your app (in ConfigMaps and Secrets), so you can change configuration without touching your code.

Benefits of Kubernetes

Kubernetes has become the industry standard for container orchestration because it simplifies application deployment, scaling, and management. Here are the key benefits that make Kubernetes so powerful:

Automated Scheduling

Kubernetes automatically places Pods on the best available Nodes by analyzing resource requirements and constraints. This ensures workloads are efficiently distributed across the cluster without manual intervention.

Self-Healing

Kubernetes constantly monitors the health of applications. If a Pod fails, it is restarted or replaced. If a Node goes down, its workloads are recreated on healthy Nodes. Liveness probes restart unhealthy containers, and readiness probes keep containers that are not ready from receiving traffic.

Seamless Rollouts & Rollbacks

Application updates are handled smoothly through rolling updates, which gradually replace old Pods with new ones without downtime. If issues arise, Kubernetes supports rollbacks to restore the previous stable version quickly.

Scaling & Load Balancing

Kubernetes supports horizontal scaling; with a Horizontal Pod Autoscaler, the number of Pods adjusts automatically based on CPU, memory, or custom metrics. Built-in Service load balancing distributes traffic across Pods, improving reliability during high demand.

Resource Optimization

Kubernetes manages CPU and memory resources through requests and limits. This helps prevent resource starvation, promotes fair usage, and works with autoscalers to keep the system efficient and cost-effective.

Portability & Flexibility

Kubernetes can run almost anywhere: on public clouds, in private data centers, or in hybrid environments. This flexibility reduces vendor lock-in and lets you deploy applications consistently across multiple infrastructures.

Declarative Configuration

With Kubernetes, you define the desired state of your applications (such as the number of replicas, versions, and configurations), and Kubernetes continuously works to make the actual state match it.

Ecosystem & Extensibility

Kubernetes has a large ecosystem with integrations for CI/CD, monitoring, logging, and security tools. It is also extensible, allowing you to build custom controllers and operators tailored to your use cases.

Conclusion

Kubernetes has transformed the way modern applications are deployed and managed. By automating scheduling, scaling, load balancing, and self-healing, it reduces the complexity of running containerized workloads. Its flexibility and portability allow teams to deploy applications consistently across different environments, while its declarative approach ensures that the desired state of applications is always maintained.

Whether you are a developer, DevOps engineer, or IT manager, understanding Kubernetes empowers you to build resilient, scalable, and efficient applications. As the industry increasingly adopts cloud-native technologies, Kubernetes remains an essential tool for managing workloads reliably and effectively.