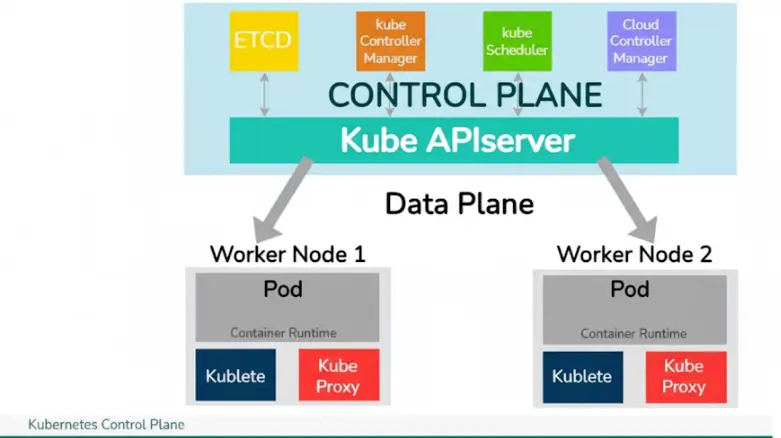

Kubernetes, an open-source container orchestration platform, has become the cornerstone of modern software deployment and management. At its core is the Control Plane, a set of components that work together to maintain the desired state of your cluster. In this article, we discuss each Control Plane component in detail, its role, and how the components collaborate to keep containerized applications running smoothly.

What Is the Kubernetes Control Plane?

The Kubernetes Control Plane, historically called the “master” (the machines that host it are now called control plane nodes), is a set of components that collectively manage the state of a Kubernetes cluster. It acts as the brain of the cluster, making global decisions about the cluster (for example, scheduling) and detecting and responding to cluster events (for example, starting a new pod when a Deployment’s replicas field is unsatisfied).

Components of the Kubernetes Control Plane

The Control Plane consists of four main components:

- Kube-API Server

- etcd

- Controller Manager

- Kube Scheduler

1. Kube-API Server

The Kube-API Server (kube-apiserver) is the primary interface for cluster communication. It exposes the Kubernetes API, which users, control plane components, and external tools all use to interact with the cluster. Every incoming request is authenticated, authorized, and passed through admission control; the server then validates the object and persists the resulting state in etcd, Kubernetes’ central data store. The API server is the only component that talks to etcd directly: every other component reads and writes cluster state through it, which makes the API server the communication hub of the cluster.

Key Points:

- Central component for cluster management.

- Exposes the Kubernetes API for communication.

- Validates requests and updates objects in etcd.
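The order of those checks matters: a request that fails authentication never reaches authorization, and nothing is written to etcd until every earlier stage has passed. The sketch below walks one request through a simplified version of that pipeline. It is an illustration under stated assumptions, not kube-apiserver code: Request, ALLOWED, store, and handle are hypothetical names, a Python dict stands in for etcd, authorization is a hard-coded allow list, and validating admission webhooks are omitted. The /registry/<resource>/<namespace>/<name> key shape mirrors the layout commonly seen in a Kubernetes etcd database.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

@dataclass
class Request:
    user: Optional[str]          # None models a request whose credentials failed authentication
    verb: str                    # e.g. "create"
    resource: str                # e.g. "pods"
    obj: Dict = field(default_factory=dict)

# Hypothetical authorization policy: allowed (user, verb, resource) triples.
ALLOWED = {("alice", "create", "pods")}

# Hypothetical in-memory stand-in for etcd.
store: Dict[str, Dict] = {}

def handle(req: Request) -> Tuple[int, str]:
    """Run a request through a simplified version of the API server's stages."""
    # 1. Authentication: who is making the request?
    if req.user is None:
        return 401, "Unauthorized"
    # 2. Authorization: may this user perform this verb on this resource?
    if (req.user, req.verb, req.resource) not in ALLOWED:
        return 403, "Forbidden"
    # 3. Mutating admission: e.g. default the namespace if the client omitted it.
    meta = req.obj.setdefault("metadata", {})
    meta.setdefault("namespace", "default")
    # 4. Validation: reject malformed objects.
    if not meta.get("name"):
        return 422, "metadata.name is required"
    # 5. Persistence: write the object under a /registry/<resource>/<namespace>/<name> key.
    key = f"/registry/{req.resource}/{meta['namespace']}/{meta['name']}"
    store[key] = req.obj
    return 201, key

print(handle(Request("alice", "create", "pods", {"metadata": {"name": "web-1"}})))
# (201, '/registry/pods/default/web-1')
print(handle(Request("bob", "create", "pods", {"metadata": {"name": "web-2"}})))
# (403, 'Forbidden')
```

The status codes (401, 403, 422, 201) match the HTTP semantics the real API server uses for these outcomes, but the checks themselves are deliberately trivial.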

2. etcd

etcd is a distributed, highly available key-value store that holds the cluster’s configuration and state. It uses the Raft consensus algorithm to keep data consistent across all etcd members. Clients can watch keys and receive changes in real time, which is how the API server learns about updates and streams them to other components. Its reliability and performance make etcd critical to the integrity of a Kubernetes cluster.

Key Points:

- Stores cluster configuration data reliably.

- Ensures consistency with Raft consensus.

- Lets clients watch for changes in real time.
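The watch mechanism is what lets the rest of the control plane react to changes instead of polling. The toy class below is a minimal in-memory sketch, not the etcd client API: ToyKV and its methods are hypothetical names, watches are delivered as synchronous callbacks rather than over a streaming connection, and Raft replication is not modeled. It does illustrate two real etcd ideas: every write increments a store-wide revision, and a watcher on a key prefix receives each change under that prefix in order.

```python
from typing import Callable, Dict, List, Optional, Tuple

class ToyKV:
    """In-memory stand-in for etcd: a revisioned key-value store with prefix watches."""

    def __init__(self) -> None:
        self.revision = 0
        self.data: Dict[str, Tuple[str, int]] = {}         # key -> (value, mod_revision)
        self.watchers: List[Tuple[str, Callable]] = []      # (prefix, callback)

    def put(self, key: str, value: str) -> int:
        self.revision += 1                                  # every write bumps the revision
        self.data[key] = (value, self.revision)
        self._notify("PUT", key, value)
        return self.revision

    def delete(self, key: str) -> None:
        if key in self.data:
            self.revision += 1
            del self.data[key]
            self._notify("DELETE", key, None)

    def get(self, key: str) -> Optional[str]:
        entry = self.data.get(key)
        return entry[0] if entry else None

    def watch(self, prefix: str, callback: Callable) -> None:
        self.watchers.append((prefix, callback))

    def _notify(self, event_type: str, key: str, value: Optional[str]) -> None:
        for prefix, callback in self.watchers:
            if key.startswith(prefix):                      # only deliver matching keys
                callback(event_type, key, value, self.revision)

kv = ToyKV()
events = []
kv.watch("/registry/pods/", lambda *event: events.append(event))
kv.put("/registry/pods/default/web-1", "Pending")
kv.put("/registry/services/default/web", "ClusterIP")     # different prefix: not delivered
kv.put("/registry/pods/default/web-1", "Running")
kv.delete("/registry/pods/default/web-1")
for event in events:
    print(event)
# ('PUT', '/registry/pods/default/web-1', 'Pending', 1)
# ('PUT', '/registry/pods/default/web-1', 'Running', 3)
# ('DELETE', '/registry/pods/default/web-1', None, 4)
```

The gap in revisions (1, 3, 4) shows that the revision counter is global to the store, not per key; a watcher that reconnects can resume from the last revision it saw.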

3. Controller Manager

The Controller Manager (kube-controller-manager) runs the cluster’s built-in controllers. Each controller is logically a separate process, but they are compiled into a single binary and run as one process. Each controller continuously compares the desired state (declared in API objects, often created from configuration files) with the actual state and acts to close any gap, automatically handling tasks like node management, replication, endpoint discovery, and namespace management. This automation reduces manual intervention and keeps the cluster operating consistently.

Key Points:

- Observes the cluster state through the API server.

- Ensures actual state matches desired state.

- Automates tasks like replication, node, and namespace management.
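The compare-and-act cycle described above is usually called a reconciliation loop. Below is a minimal sketch of one reconciliation step for a replica-count controller, assuming a hypothetical reconcile function and plain dictionaries for pods. The real ReplicaSet controller works on API objects and uses more elaborate rules to choose which pods to delete; here it simply removes failed pods and trims surplus pods from the front of the list.

```python
from typing import Dict, List, Tuple

Pod = Dict[str, str]          # {"name": ..., "phase": ...}
Action = Tuple[str, str]      # ("create" | "delete", pod name)

def reconcile(desired: int, pods: List[Pod], next_id: int) -> List[Action]:
    """Compare desired vs. actual state and return the actions that close the gap."""
    actions: List[Action] = []
    # Failed pods do not count toward the replica total; clean them up.
    healthy = [p for p in pods if p["phase"] != "Failed"]
    actions += [("delete", p["name"]) for p in pods if p["phase"] == "Failed"]
    gap = desired - len(healthy)
    if gap > 0:       # too few replicas: create the missing ones
        actions += [("create", f"web-{next_id + i}") for i in range(gap)]
    elif gap < 0:     # too many replicas: delete the surplus
        actions += [("delete", p["name"]) for p in healthy[:-gap]]
    return actions

pods = [
    {"name": "web-1", "phase": "Running"},
    {"name": "web-2", "phase": "Failed"},
]
print(reconcile(3, pods, next_id=3))
# [('delete', 'web-2'), ('create', 'web-3'), ('create', 'web-4')]
```

A real controller runs this kind of logic whenever a watch event arrives for the objects it manages, and it sends the resulting create and delete calls to the API server rather than acting on a local list. Because each step only looks at the current gap, running it again after the actions succeed produces no further work.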

4. Kube Scheduler

The Kube Scheduler (kube-scheduler) assigns newly created pods to suitable nodes. It first filters out nodes that cannot run the pod, for example because they lack the requested CPU or memory, carry taints the pod does not tolerate, or fail node affinity rules. It then scores the remaining nodes and picks the highest-scoring one. By spreading workloads sensibly, the scheduler makes efficient use of resources and helps maintain cluster performance. Administrators can also customize scheduling behavior to meet specific application needs.

Key Points:

- Assigns pods to nodes based on resource availability and constraints.

- Tracks the resources already requested on each node and places pods accordingly.

- Enhances efficiency by distributing workloads intelligently.
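The filter-then-score approach can be sketched in a few lines. This is a simplified illustration, not kube-scheduler’s plugin framework: Node, PodSpec, feasible, score, and schedule are hypothetical names, taints are reduced to strings that must match a toleration exactly, nodeSelector-style labels stand in for node affinity, and the scoring function loosely follows a “least allocated” strategy that prefers nodes with the most free capacity after placement.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

@dataclass
class Node:
    name: str
    cpu_allocatable: float        # cores the node offers to pods
    cpu_requested: float          # cores already requested by pods on the node
    mem_allocatable: int          # MiB
    mem_requested: int            # MiB
    taints: Set[str] = field(default_factory=set)       # e.g. "gpu=true:NoSchedule"
    labels: Dict[str, str] = field(default_factory=dict)

@dataclass
class PodSpec:
    cpu: float                    # requested cores
    mem: int                      # requested MiB
    tolerations: Set[str] = field(default_factory=set)
    node_selector: Dict[str, str] = field(default_factory=dict)

def feasible(node: Node, pod: PodSpec) -> bool:
    """Filter phase: can this node run the pod at all?"""
    fits = (node.cpu_requested + pod.cpu <= node.cpu_allocatable
            and node.mem_requested + pod.mem <= node.mem_allocatable)
    tolerated = node.taints <= pod.tolerations          # every taint must be tolerated
    selected = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and tolerated and selected

def score(node: Node, pod: PodSpec) -> float:
    """Score phase: fraction of CPU and memory left free after placement, scaled to 0-100."""
    cpu_free = (node.cpu_allocatable - node.cpu_requested - pod.cpu) / node.cpu_allocatable
    mem_free = (node.mem_allocatable - node.mem_requested - pod.mem) / node.mem_allocatable
    return (cpu_free + mem_free) / 2 * 100

def schedule(pod: PodSpec, nodes: List[Node]) -> Optional[str]:
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None               # no feasible node: the pod stays Pending
    return max(candidates, key=lambda n: score(n, pod)).name

nodes = [
    Node("node-a", 4.0, 3.5, 8192, 2048),                       # nearly out of CPU
    Node("node-b", 4.0, 1.0, 8192, 4096),
    Node("node-c", 8.0, 0.0, 16384, 0, taints={"gpu=true:NoSchedule"}),
]
pod = PodSpec(cpu=0.5, mem=512)
print(schedule(pod, nodes))  # node-b
```

Here node-c is filtered out by its untolerated taint, and node-b outscores node-a because it keeps more CPU free. Adding the matching toleration to the pod makes the larger, empty node-c the winner.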

Together, these components maintain the desired state of the cluster, respond to events, and keep applications running as specified in their deployment configurations. The Control Plane is often replicated across multiple nodes for redundancy and fault tolerance, and communication between its components is secured with TLS to protect cluster integrity.
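How many failures a replicated control plane can absorb is largely set by etcd’s Raft quorum: a write is committed only once a majority of members acknowledge it. The short calculation below (the function names are illustrative) shows why odd etcd member counts are the usual recommendation: going from three to four members raises the quorum without increasing the number of failures tolerated.

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft cluster with the given number of members."""
    return members // 2 + 1

def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)

for n in range(1, 8):
    print(f"{n} members: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
# 3 members: quorum 2, tolerates 1 failure(s)
# 4 members: quorum 3, tolerates 1 failure(s)
# 5 members: quorum 3, tolerates 2 failure(s)
```

The loop prints all seven rows; only three are shown in the comments.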

Kubernetes Control Plane Workflow

User Interaction

- Users or external systems interact with the Kubernetes cluster through the API server.

- API requests include actions such as deploying applications, scaling workloads, or updating configurations.

- Requests are sent with tools such as kubectl or from custom applications that call the API directly.
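Under the hood, these requests are HTTP calls against REST paths. The sketch below builds paths following the Kubernetes API convention (the core group under /api/v1, named groups such as apps under /apis/<group>/<version>) and pairs them with example request bodies for a deploy and a scale operation. The resource_path helper, the web Deployment, and the nginx image are hypothetical, and no request is actually sent; a real client would also need credentials and, for the PATCH, a patch content type such as application/merge-patch+json.

```python
from typing import Optional

def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a Kubernetes REST path (hypothetical helper)."""
    # The core API group ("") is served under /api; named groups under /apis/<group>.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    if namespace:
        base += f"/namespaces/{namespace}"
    base += f"/{resource}"
    if name:
        base += f"/{name}"
    return base

# Hypothetical Deployment: three replicas of an nginx container.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
        },
    },
}

calls = [
    # Deploy: POST the new object to the collection path.
    ("POST", resource_path("apps", "v1", "deployments", "default"), deployment),
    # Scale: PATCH only spec.replicas on the named object (a JSON merge patch body).
    ("PATCH", resource_path("apps", "v1", "deployments", "default", "web"),
     {"spec": {"replicas": 5}}),
]

for method, path, _body in calls:
    print(method, path)
# POST /apis/apps/v1/namespaces/default/deployments
# PATCH /apis/apps/v1/namespaces/default/deployments/web
```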

API Server Processing

- The API server is the central component that processes every API request.

- It authenticates the user, checks that the user is authorized to perform the action, runs admission control, and validates the request.

- Once the request passes these checks, the API server updates the cluster’s desired state in etcd.

etcd Update

- Changes to cluster state are saved in etcd, which maintains a consistent and reliable record of the cluster’s configuration.

- This record includes information about nodes, pods, services, and other Kubernetes objects.

- Because the API server writes every accepted change to etcd, the stored desired state reflects the changes the user requested.

- Running etcd as a replicated, distributed datastore adds resilience to the Kubernetes Control Plane.

Controller Manager Action

- The Controller Manager continuously watches the state of the cluster through the API server; controllers do not access etcd directly.

- When a controller detects a difference between the current and desired state, it takes the appropriate action to reconcile them.

For example:

- Replication Controller: Ensures that the specified number of pod replicas is running.

- Node Controller: Notices and responds when nodes become unavailable.

- Endpoints Controller: Populates Endpoints objects, maintaining the mapping between Services and the Pods that back them.
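At its core, the Endpoints controller’s job is a label-selector match. Below is a minimal sketch, assuming simplified dictionaries for Services and Pods (the field names are illustrative, not the real API schema): every pod whose labels satisfy the Service’s selector and that is ready contributes an ip:port address. The real controller also records not-ready addresses separately, and newer clusters track the same mapping with EndpointSlices.

```python
from typing import Dict, List

def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every selector key/value must appear in the pod's labels."""
    return all(labels.get(k) == v for k, v in selector.items())

def compute_endpoints(service: dict, pods: List[dict]) -> List[str]:
    """Return 'ip:port' addresses of ready pods selected by the Service (sorted for stable output)."""
    port = service["targetPort"]
    return sorted(
        f"{p['ip']}:{port}"
        for p in pods
        if matches(service["selector"], p["labels"]) and p["ready"]
    )

service = {"name": "web", "selector": {"app": "web"}, "targetPort": 8080}
pods = [
    {"name": "web-1", "ip": "10.244.1.5", "labels": {"app": "web"}, "ready": True},
    {"name": "web-2", "ip": "10.244.2.7", "labels": {"app": "web"}, "ready": False},
    {"name": "db-1", "ip": "10.244.1.9", "labels": {"app": "db"}, "ready": True},
]
print(compute_endpoints(service, pods))  # ['10.244.1.5:8080']
```

Like any controller, the real one recomputes this list whenever a relevant Pod or Service changes, so web-2 would be added as soon as it reports ready.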

Scheduler Decision

- The Scheduler decides which node each new pod should run on, based on resource availability, affinity/anti-affinity rules, taints and tolerations, and other constraints.

- It records its decision through the API server by binding the pod to the chosen node. The kubelet on that node then starts the pod’s containers, while the controllers keep the cluster converging toward the desired state.

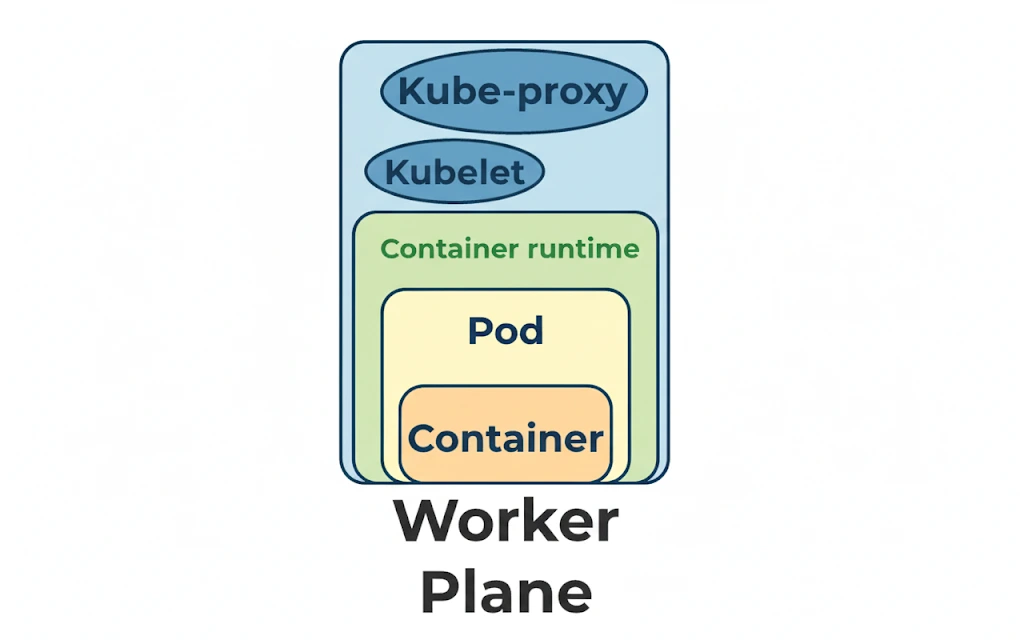

Node Components

Each node in the cluster runs several crucial components that enable the execution and management of containers. Here’s a brief overview of these key node components: kubelet, kube-proxy, and the container runtime.

- kubelet: The kubelet is the agent that runs on every node. It watches the API server for pods assigned to its node, handles pod lifecycle events such as creation, updates, and deletion, and makes sure the containers described in those pods are running and healthy.

- kube-proxy: kube-proxy is a network proxy that runs on every node and implements Kubernetes Services. It maintains network rules (for example, iptables or IPVS rules) that route and load-balance traffic sent to a Service across the pods backing it.

- Container runtime: This is the software responsible for running containers within pods. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, since Kubernetes removed its built-in Docker support (dockershim) in version 1.24. The container runtime pulls container images, creates containers, manages their lifecycle, and isolates containers from one another on the node.
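In its iptables mode, kube-proxy load-balances a Service by chaining one rule per endpoint, each using the iptables statistic match in random mode: with n endpoints the first rule matches with probability 1/n, the next with 1/(n-1), and so on until the last rule, which always matches. The sketch below checks that this arithmetic gives every endpoint an equal share and simulates the rule chain; the function names and backend addresses are hypothetical, and no iptables rules are created.

```python
import random
from fractions import Fraction
from typing import List, Sequence

def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule in the chain: rule i (0-based) uses 1/(n - i)."""
    return [Fraction(1, n - i) for i in range(n)]

def effective_shares(n: int) -> List[Fraction]:
    """Overall fraction of new connections that each endpoint receives."""
    shares = []
    remaining = Fraction(1)              # probability that no earlier rule has matched yet
    for p in rule_probabilities(n):
        shares.append(remaining * p)     # reach this rule AND match it
        remaining *= 1 - p
    return shares

def pick_endpoint(endpoints: Sequence[str], rng: random.Random) -> str:
    """Walk the rule chain once, as a new connection would."""
    n = len(endpoints)
    for i, endpoint in enumerate(endpoints):
        if rng.random() < 1 / (n - i):   # last rule is 1/1, so it always matches
            return endpoint
    raise ValueError("no endpoints")

print([str(p) for p in rule_probabilities(3)])  # ['1/3', '1/2', '1']
print([str(s) for s in effective_shares(3)])    # ['1/3', '1/3', '1/3']

rng = random.Random(42)
backends = ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.2:8080"]
counts = {b: 0 for b in backends}
for _ in range(30_000):
    counts[pick_endpoint(backends, rng)] += 1
print(counts)  # each count should be close to 10,000
```

The decreasing-probability chain is what lets a fixed, ordered list of rules produce a uniform random choice without any shared state between connections.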

Addons

Addons are supplementary components that extend the cluster’s capabilities with additional features or services. Here’s an overview of some common Kubernetes addons:

- DNS: Provides DNS-based service discovery for Kubernetes services.

- Dashboard: A web-based user interface for managing and monitoring Kubernetes clusters.

- Ingress Controller: Manages external access to services in the cluster, typically over HTTP and HTTPS, by implementing Ingress rules.

- Metrics Server: Collects resource usage data (CPU and memory) from the cluster’s nodes and pods; this data powers kubectl top and the Horizontal Pod Autoscaler.

- Logging: Aggregates and archives logs from system components and containers.

- Monitoring: Provides monitoring and alerting for the Kubernetes cluster.

- Networking (CNI): Configures pod networking through plugins that implement the Container Network Interface; many of these plugins also enforce network policies.

- Storage (CSI): Integrates external storage systems with Kubernetes for persistent storage.

Best Practices for the Kubernetes Control Plane

- High Availability (HA): Run the API server, controller manager, scheduler, and etcd on multiple control plane nodes in a highly available setup, using an odd number of etcd members (typically three or five). The controller manager and scheduler use leader election, so one replica is active while the others stand by. Essential components then keep running and remain reachable even if a node fails, which improves the cluster’s overall resilience.

- Secure Access Control: Set up strong authentication and authorization to control who can access the Kubernetes API server and other control plane components. Use Role-Based Access Control (RBAC) to define granular permissions for users and service accounts, granting only what each role requires. Additionally, restrict network access to the control plane from unauthorized sources (for example, with firewall rules) and use Transport Layer Security (TLS) for all communication.

- Regular Updates and Maintenance: Keep control plane components up to date with the latest security fixes and patches to reduce vulnerabilities and maintain the cluster’s reliability. Schedule routine maintenance to perform upgrades, check system health, and adjust configuration. During maintenance, minimize downtime and disruption by using rolling updates (upgrading one control plane node at a time) and canary deployments.
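RBAC authorization comes down to matching a request’s API group, resource, and verb against the rules of the roles bound to the user. Below is a minimal sketch of that matching, assuming hypothetical PolicyRule and is_allowed names; it ignores role bindings, namespaces, resourceNames, subresources, and non-resource URLs. It does reflect one real property of RBAC: rules only grant permissions, so a request is allowed if any rule matches, and there are no deny rules.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PolicyRule:
    api_groups: List[str]   # "" is the core group; "*" matches any group
    resources: List[str]    # "*" matches any resource
    verbs: List[str]        # "*" matches any verb

def _match(allowed: List[str], value: str) -> bool:
    return "*" in allowed or value in allowed

def is_allowed(rules: List[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """Additive check: allowed if ANY rule covers the group, resource, and verb."""
    return any(
        _match(r.api_groups, api_group) and _match(r.resources, resource) and _match(r.verbs, verb)
        for r in rules
    )

# Hypothetical read-only "pod-reader" role.
pod_reader = [PolicyRule(api_groups=[""], resources=["pods"], verbs=["get", "list", "watch"])]

print(is_allowed(pod_reader, "", "pods", "list"))            # True
print(is_allowed(pod_reader, "", "pods", "delete"))          # False
print(is_allowed(pod_reader, "apps", "deployments", "get"))  # False
```

Granting least privilege then means writing the narrowest rules that still cover what a user or service account actually needs, rather than relying on wildcards.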

Conclusion

The Kubernetes Control Plane is the brain and nervous system of your container orchestration environment. Understanding its components and workflow is essential for both administrators and developers working with Kubernetes. By exploring the intricacies of the Control Plane, you gain insight into how Kubernetes manages containerized workloads, maintains consistency, and keeps your applications scalable and resilient in a dynamic environment.