What is ECS?

Amazon Elastic Container Service (ECS) is a fully managed container orchestration service from AWS. It helps you run and manage Docker containers on a cluster of EC2 instances or on serverless AWS Fargate capacity, without installing or operating your own orchestration software.

Think of ECS as a conductor leading an orchestra. The conductor doesn’t play any instrument but keeps everything in sync. ECS works the same way. It doesn’t run your applications directly but ensures your containers deploy, scale, and run smoothly.

ECS offers two launch types in the AWS cloud. With Fargate, AWS manages the infrastructure for you. With EC2, you control the servers that run your containers. (A third launch type, EXTERNAL, runs tasks on your own servers through ECS Anywhere.) ECS also integrates with Elastic Load Balancing, Auto Scaling, and Amazon VPC, which helps you build reliable and scalable applications.

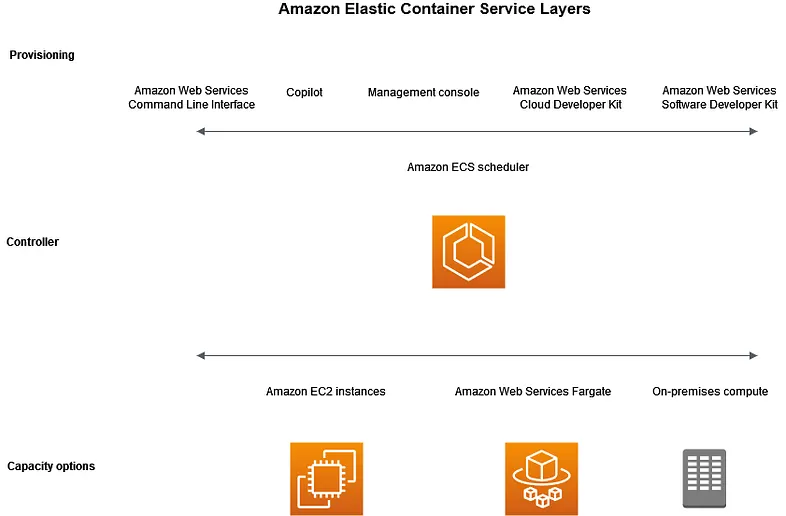

There are three layers in Amazon ECS:

- Provisioning: The tools that you use to interface with the scheduler to deploy and manage your applications and containers.

- Capacity: The infrastructure where your containers run.

- Controller: The software that deploys and manages the applications that run in your containers.

Amazon ECS capacity is the infrastructure where your containers run. The following is an overview of the capacity options:

- Amazon EC2 instances in the AWS cloud: You choose the instance type and the number of instances, and you manage the capacity.

- Serverless (AWS Fargate) in the AWS cloud: Fargate is a serverless, pay-as-you-go compute engine. With Fargate, you don’t need to manage servers, handle capacity planning, or isolate container workloads for security.

- On-premises virtual machines (VM) or servers: Amazon ECS Anywhere supports registering an external instance, such as an on-premises server or virtual machine (VM), to your Amazon ECS cluster.

ECS Application Lifecycle

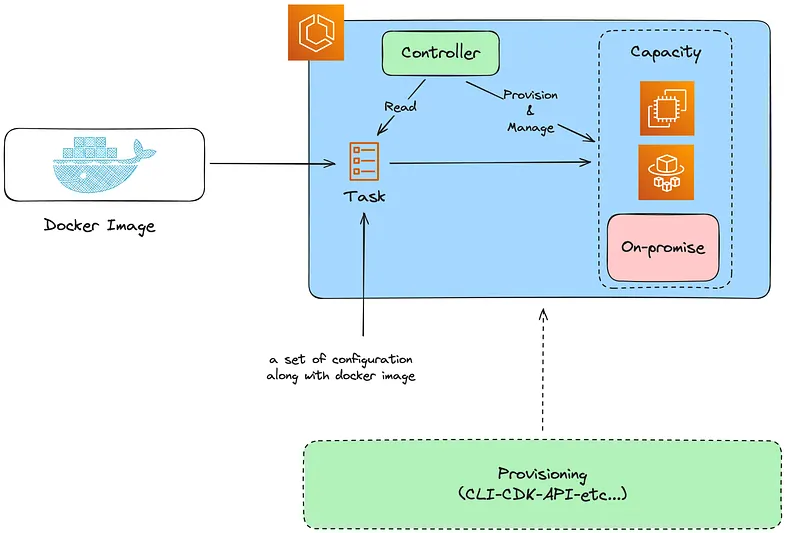

You must architect your applications so that they can run in containers. A container is a standardized unit of software development that holds everything your application requires to run, including the relevant code, runtime, system tools, and system libraries. Containers are created from a read-only template called an image. Images are typically built from a Dockerfile, a plaintext file that contains the instructions for building a container image. After they’re built, images are stored in a registry such as Amazon ECR, from which ECS pulls them when it starts your containers.
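Images in a private Amazon ECR repository are referenced by a URI that encodes the account, Region, repository, and tag. The sketch below builds and parses such a URI, assuming the usual `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` form used in commercial AWS Regions; digest-based references and other partitions are deliberately not handled. The account ID, Region, and repository name in the usage line are placeholders.

```python
import re
from dataclasses import dataclass

# Tag-based private ECR image URI (commercial Regions only, by assumption):
# <account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>
ECR_URI = re.compile(
    r"^(?P<account>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<repository>[a-z0-9._/-]+):(?P<tag>[\w.-]+)$"
)


@dataclass(frozen=True)
class EcrImage:
    account: str
    region: str
    repository: str
    tag: str = "latest"

    def uri(self) -> str:
        """Full image reference, as you would put it in a task definition."""
        return (f"{self.account}.dkr.ecr.{self.region}.amazonaws.com/"
                f"{self.repository}:{self.tag}")


def parse_ecr_uri(uri: str) -> EcrImage:
    """Split an ECR image URI back into its parts, or raise ValueError."""
    match = ECR_URI.match(uri)
    if match is None:
        raise ValueError(f"not a tag-based private ECR image URI: {uri}")
    return EcrImage(**match.groupdict())


image = EcrImage("123456789012", "us-east-1", "my-app", "v1")  # placeholder values
print(image.uri())
```

Public images such as `nginx:latest` from Docker Hub are not ECR URIs, so `parse_ecr_uri` rejects them.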

After you create and store your image, you create an Amazon ECS task definition. A task definition is a blueprint for your application. It is a text file in JSON format that describes the parameters and one or more containers that form your application. For example, you can use it to specify the image and parameters for the operating system, which containers to use, which ports to open for your application, and what data volumes to use with the containers in the task. The specific parameters available for your task definition depend on the needs of your specific application.
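To make the shape of a task definition concrete, here is a minimal one built as a Python dictionary. The field names (`family`, `containerDefinitions`, `image`, `essential`, `memory`, `portMappings`) follow the ECS RegisterTaskDefinition API, so the dictionary could be passed to boto3 as `ecs.register_task_definition(**td)`; the family name `web` and the sizes are illustrative assumptions, not recommendations.

```python
import json


def minimal_task_definition(family: str, image: str, container_port: int) -> dict:
    """Build a minimal task definition shaped like RegisterTaskDefinition input."""
    return {
        "family": family,  # task definitions are versioned as family:revision
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,  # if this container stops, the task stops
                "memory": 512,      # hard memory limit for the container, in MiB
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


td = minimal_task_definition("web", "nginx:latest", 80)
print(json.dumps(td, indent=2))  # the JSON text a task definition file would hold
```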

ECS Clusters

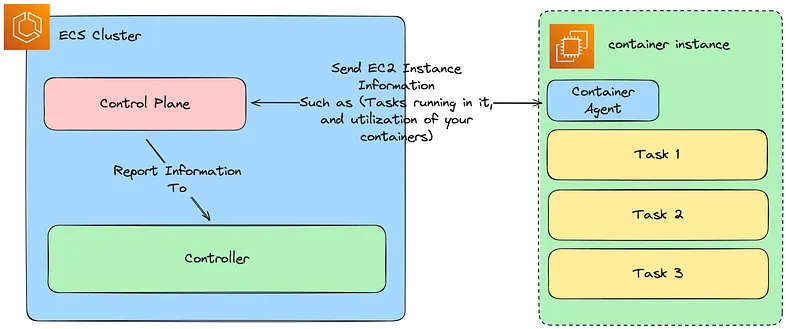

A cluster is a logical grouping of tasks or services. It runs on the capacity infrastructure that you register to the cluster.

ECS Tasks and Services

A task is the running instance of a task definition inside a cluster. You can run a task on its own or as part of a service.

An ECS service maintains the desired number of tasks. For example, if one of your tasks fails or stops, the ECS service scheduler launches a replacement task from the same task definition, so the service returns to the desired task count.
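The service scheduler’s behaviour can be pictured as a reconciliation loop that compares running tasks with the desired count. The toy model below is a simplification written for illustration; the class `ToyService` and its task IDs are hypothetical, and the real scheduler also handles placement, health checks, and deployments.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyService:
    """Toy model of an ECS service (not the real scheduler, only its core idea)."""
    task_definition: str
    desired_count: int
    running: set = field(default_factory=set)
    _ids: count = field(default_factory=count)

    def reconcile(self) -> list:
        """Start or stop tasks until len(running) == desired_count."""
        actions = []
        while len(self.running) < self.desired_count:
            task_id = f"{self.task_definition}-{next(self._ids)}"
            self.running.add(task_id)
            actions.append(("start", task_id))
        while len(self.running) > self.desired_count:
            task_id = sorted(self.running)[-1]  # arbitrary but deterministic choice
            self.running.discard(task_id)
            actions.append(("stop", task_id))
        return actions


svc = ToyService("web:1", desired_count=3)
svc.reconcile()                 # starts web:1-0, web:1-1, web:1-2
svc.running.discard("web:1-1")  # simulate a task failing
print(svc.reconcile())          # [('start', 'web:1-3')]
```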

ECS Container Agent

The container agent runs on every container instance (EC2 or external) in an ECS cluster. It reports the currently running tasks and resource usage back to ECS, and it starts and stops tasks whenever ECS sends a request. With Fargate, AWS runs and manages the agent for you.

After you deploy the task or service, you can use any of the following tools to monitor your deployment and application:

- CloudWatch

- Runtime Monitoring

Architect your solution for Amazon ECS

Before you provision your ECS cluster, you need to make decisions about:

- Capacity

- Networking

- Monitoring

Capacity

The capacity is the infrastructure where your containers run. The following are the options:

- Amazon EC2 instances

- Serverless (AWS Fargate)

- On-premises virtual machines (VM) or servers

You specify the infrastructure when you create a cluster; this becomes the cluster’s default capacity provider strategy. You also specify the infrastructure type when you register a task definition, where it is called the “launch type”. When you run a standalone task or create a service, you can choose a launch type or capacity provider strategy explicitly; if you don’t, ECS uses the cluster’s default capacity provider strategy.
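The precedence described above can be sketched as a small resolver. This is a simplified model under stated assumptions: the cluster default is represented as a single launch type string rather than a full capacity provider strategy, and the function name `resolve_launch_type` is hypothetical. The one real constraint it mirrors is that the chosen type must appear in the task definition’s `requiresCompatibilities` list.

```python
from typing import Optional, Sequence

VALID_LAUNCH_TYPES = {"EC2", "FARGATE", "EXTERNAL"}


def resolve_launch_type(requires_compatibilities: Sequence[str],
                        cluster_default: Optional[str],
                        requested: Optional[str] = None) -> str:
    """Return the launch type a task would use in this simplified model.

    An explicit choice on run_task/create_service wins; otherwise the
    cluster default (simplified to one launch type) is used.
    """
    chosen = requested or cluster_default
    if chosen is None:
        raise ValueError("no launch type requested and the cluster has no default")
    if chosen not in VALID_LAUNCH_TYPES:
        raise ValueError(f"unknown launch type: {chosen}")
    if chosen not in requires_compatibilities:
        raise ValueError(f"task definition is not compatible with {chosen}")
    return chosen


compat = ["EC2", "FARGATE"]  # a task definition registered for both
print(resolve_launch_type(compat, cluster_default="FARGATE"))                   # FARGATE
print(resolve_launch_type(compat, cluster_default="FARGATE", requested="EC2"))  # EC2
```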

Fargate

Fargate is a serverless, pay-as-you-go compute engine that lets you focus on building applications without managing servers. When you choose Fargate, you don’t need to manage an EC2 infrastructure. All you need to do is build your container image and define which cluster you want to run your applications on.

With Fargate, you size each task by selecting the exact CPU and memory it needs instead of choosing and managing EC2 instance types. Fargate provisions compute for every task you launch, so you don’t manage or scale a fleet of instances to absorb traffic spikes; you still scale the number of tasks, for example with Service Auto Scaling. This means that there is less operational effort with Fargate.
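Fargate accepts only certain CPU and memory pairings per task. The table below reflects the Linux task sizes as AWS documented them at the time of writing (CPU in units, where 1024 units is 1 vCPU; memory in MiB); treat it as an assumption and check the current documentation before relying on it, since the largest sizes also depend on the platform version.

```python
# Fargate task sizes (CPU units -> allowed memory in MiB), as documented at the
# time of writing. Verify against current AWS documentation before use.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
    8192: list(range(16384, 61440 + 1, 4096)),
    16384: list(range(32768, 122880 + 1, 8192)),
}


def is_valid_fargate_size(cpu_units: int, memory_mib: int) -> bool:
    """True if the CPU/memory pair is one Fargate would accept for a task."""
    return memory_mib in FARGATE_SIZES.get(cpu_units, [])


print(is_valid_fargate_size(256, 512))   # True: 0.25 vCPU, 0.5 GB
print(is_valid_fargate_size(256, 4096))  # False: too much memory for 0.25 vCPU
```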

Fargate is suitable for the following workloads:

- Large workloads that require low operational overhead

- Small workloads that have an occasional burst

- Tiny workloads

- Batch workloads

The following diagram shows the general architecture.

EC2

The EC2 launch type is suitable for large workloads that must be price optimized.

When considering how to model task definitions and services using the EC2 launch type, it is recommended that you consider what processes must run together and how you might go about scaling each component.

As an example, suppose that an application consists of the following components:

- A frontend service that displays information on a webpage

- A backend service that provides APIs for the frontend service

- A data store

For this example, create task definitions that group together the containers used for a common purpose, and keep the different components in separate task definitions.

The following example cluster has three container instances that are running three front-end service containers, two backend service containers, and one data store service container.

You can group related containers in a task definition, such as linked containers that must be run together. For example, add a log streaming container to your front-end service and include it in the same task definition. After you have your task definitions, you can create services from them to maintain the availability of your desired tasks.
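The grouping above can be sketched as three task definitions, one per component, with the log streaming sidecar placed in the frontend task. All family names, container names, images (`example/...`), and ports here are hypothetical placeholders; the dictionary keys follow the ECS RegisterTaskDefinition API.

```python
from typing import Optional


def container(name: str, image: str, port: Optional[int] = None,
              essential: bool = True, memory: int = 256) -> dict:
    """One container definition; memory is the hard limit in MiB."""
    spec = {"name": name, "image": image, "essential": essential, "memory": memory}
    if port is not None:
        spec["portMappings"] = [{"containerPort": port, "protocol": "tcp"}]
    return spec


def build_task_definitions() -> list:
    """One task definition per component, so each can be scaled on its own."""
    frontend = {
        "family": "frontend",
        "containerDefinitions": [
            container("web", "example/frontend:1.0", port=80),
            # Sidecar that must run next to the web container, so it shares the task.
            container("log-streamer", "example/log-streamer:1.0",
                      essential=False, memory=128),
        ],
    }
    backend = {
        "family": "backend",
        "containerDefinitions": [container("api", "example/backend:1.0", port=8080)],
    }
    datastore = {
        "family": "datastore",
        "containerDefinitions": [container("db", "example/datastore:1.0", port=5432)],
    }
    return [frontend, backend, datastore]


for td in build_task_definitions():
    print(td["family"], [c["name"] for c in td["containerDefinitions"]])
```

Marking the sidecar as non-essential means a crash in the log streamer does not stop the web container; whether that trade-off fits depends on how important the logs are to you.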

In your services, you can associate containers with Elastic Load Balancing load balancers.

When your application requirements change, you can update your services to scale the number of desired tasks up or down. Or, you can update your services to deploy newer versions of the containers in your tasks.
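The service operations above map onto the `create_service` and `update_service` calls of the boto3 ECS client. The sketch only builds the keyword arguments so it runs without AWS access; the cluster name, service name, container name `web`, and the target group ARN placeholder are assumptions for illustration.

```python
def frontend_service_request(cluster: str, target_group_arn: str,
                             desired_count: int = 3) -> dict:
    """Keyword arguments for ecs.create_service(**request) behind a load balancer."""
    return {
        "cluster": cluster,
        "serviceName": "frontend",
        # A family name without ":revision" uses the latest ACTIVE revision.
        "taskDefinition": "frontend",
        "desiredCount": desired_count,
        "launchType": "EC2",
        "loadBalancers": [{
            "targetGroupArn": target_group_arn,
            "containerName": "web",  # container in the task that receives traffic
            "containerPort": 80,
        }],
    }


def scale_request(cluster: str, service: str, desired_count: int) -> dict:
    """Keyword arguments for ecs.update_service(**request) to change the task count."""
    if desired_count < 0:
        raise ValueError("desired_count must be non-negative")
    return {"cluster": cluster, "service": service, "desiredCount": desired_count}


def new_revision_request(cluster: str, service: str, family: str, revision: int) -> dict:
    """Keyword arguments for ecs.update_service(**request) to roll out a new revision."""
    return {"cluster": cluster, "service": service,
            "taskDefinition": f"{family}:{revision}"}


create = frontend_service_request("demo-cluster", "<frontend-target-group-arn>")
print(scale_request("demo-cluster", "frontend", 5))
print(new_revision_request("demo-cluster", "frontend", "frontend", 2))
```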

External (Amazon ECS Anywhere)

Amazon ECS Anywhere supports registering an external instance, such as an on-premises server or virtual machine (VM), to your Amazon ECS cluster. External instances are optimized for running applications that generate outbound traffic or process data. If your application requires inbound traffic, the lack of Elastic Load Balancing support makes running these workloads less efficient. Amazon ECS provides an EXTERNAL launch type that you can use to create services or run tasks on your external instances.

The following provides a high-level system architecture overview of Amazon ECS Anywhere. Your on-premises server has both the Amazon ECS agent and the SSM agent installed.

Networking

AWS resources are created in subnets. When you use EC2 instances, Amazon ECS launches the instances in the subnet that you specify when you create a cluster. Your tasks run in the instance subnet. For Fargate or on-premises virtual machines, you specify the subnet when you run a task or create a service.

Depending on your application, the subnet can be private or public.

You can have your application connect to the internet by using one of the following methods:

- A public subnet with an internet gateway: Use public subnets when you have public applications that require large amounts of bandwidth or minimal latency. Applicable scenarios include video streaming and gaming services.

- A private subnet with a NAT gateway: Use private subnets when you want to protect your containers from direct external access. Applicable scenarios include payment processing systems or containers storing user data and passwords.

- AWS PrivateLink: Use AWS PrivateLink to have private connectivity between VPCs, AWS services, and your on-premises networks without exposing your traffic to the public internet.
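For Fargate tasks (which always use the awsvpc network mode), the subnet choice above shows up in the `networkConfiguration` argument of `run_task` and `create_service`. The helper below is a sketch; the function name and the subnet and security group IDs are hypothetical. The key design point is `assignPublicIp`: a task in a public subnet needs a public IP to use the internet gateway, while a task in a private subnet reaches the internet through the NAT gateway instead.

```python
from typing import Sequence


def awsvpc_network_configuration(subnet_ids: Sequence[str],
                                 security_group_ids: Sequence[str],
                                 public_subnet: bool) -> dict:
    """Build the networkConfiguration argument for run_task/create_service."""
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnet_ids),
            "securityGroups": list(security_group_ids),
            # Public subnet: the task needs its own public IP to use the internet
            # gateway. Private subnet: outbound traffic goes through the NAT gateway.
            "assignPublicIp": "ENABLED" if public_subnet else "DISABLED",
        }
    }


private = awsvpc_network_configuration(["subnet-aaaa1111"], ["sg-bbbb2222"],
                                       public_subnet=False)
print(private)
```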

Monitoring

Monitoring is an important part of maintaining the reliability, availability, and performance of Amazon ECS and your AWS solutions. You should collect monitoring data from all of the parts of your AWS solution so that you can more easily debug a multi-point failure if one occurs. Before you start monitoring Amazon ECS, create a monitoring plan that includes answers to the following questions:

- What are your monitoring goals?

- What resources will you monitor?

- How often will you monitor these resources?

- What monitoring tools will you use?

- Who will perform the monitoring tasks?

- Who should be notified when something goes wrong?

The metrics made available depend on the launch type of the tasks and services in your clusters.

- If you are using the Fargate launch type for your services, then CPU and memory utilization metrics are provided to assist in the monitoring of your services.

- For the Amazon EC2 launch type, you own and need to monitor the EC2 instances that make up your underlying infrastructure. Additional CPU and memory reservation and utilization metrics are made available at the cluster, service, and task levels.

To establish a baseline you should, at a minimum, monitor the following items:

- The CPU and memory reservation and utilization metrics for your Amazon ECS clusters

- The CPU and memory utilization metrics for your Amazon ECS services
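Reservation and utilization answer different questions: reservation is what task definitions have claimed, utilization is what tasks actually consume. The arithmetic below follows how AWS describes these CPU metrics (cluster values are relative to the CPU registered by container instances, service utilization is relative to the CPU reserved by that service’s tasks), simplified to a single snapshot instead of per-period aggregates. All task names and numbers are hypothetical.

```python
def percent(part: float, whole: float) -> float:
    return 100.0 * part / whole


# Hypothetical cluster: two container instances registering 2048 CPU units each.
registered_cpu = [2048, 2048]
# CPU units reserved by three running tasks (from their task definitions).
reserved_cpu = {"web-1": 512, "web-2": 512, "worker-1": 1024}
# CPU units those tasks are actually using in this snapshot.
used_cpu = {"web-1": 300, "web-2": 100, "worker-1": 600}

cluster_cpu_reservation = percent(sum(reserved_cpu.values()), sum(registered_cpu))
cluster_cpu_utilization = percent(sum(used_cpu.values()), sum(registered_cpu))

web = ["web-1", "web-2"]  # tasks belonging to a hypothetical "web" service
service_cpu_utilization = percent(sum(used_cpu[t] for t in web),
                                  sum(reserved_cpu[t] for t in web))

print(cluster_cpu_reservation)  # 50.0
print(cluster_cpu_utilization)  # 24.4140625
print(service_cpu_utilization)  # 39.0625
```

A cluster at 50% reservation but 24% utilization has room to place more tasks, yet its running tasks are over-provisioned; that gap is exactly what the baseline is meant to reveal.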

You can use the following automated monitoring tools to watch Amazon ECS and report when something is wrong:

- Amazon CloudWatch alarms: Watch a single metric over a time period that you specify, and perform one or more actions based on the value of the metric relative to a given threshold over a number of time periods. The action is a notification sent to an Amazon Simple Notification Service (Amazon SNS) topic or an Amazon EC2 Auto Scaling policy. For services with tasks that use the Fargate launch type, you can use CloudWatch alarms to scale in and scale out the tasks in your service based on CloudWatch metrics, such as CPU and memory utilization.

- Amazon CloudWatch Logs: Monitor, store, and access the log files from the containers in your Amazon ECS tasks by specifying the awslogs log driver in your task definitions.

- Amazon CloudWatch Events (now part of Amazon EventBridge): Match events and route them to one or more target functions or streams to make changes, capture state information, and take corrective action.

- Container Insights: Collect, aggregate, and summarize metrics and logs from your containerized applications and microservices. Container Insights collects data as performance log events using embedded metric format. These performance log events are entries that use a structured JSON schema that allows high-cardinality data to be ingested and stored at scale. From this data, CloudWatch creates aggregated metrics at the cluster, task, and service level as CloudWatch metrics.

- AWS CloudTrail log monitoring: Share log files between accounts, monitor CloudTrail log files in real time by sending them to CloudWatch Logs, write log processing applications in Java, and validate that your log files have not changed after delivery by CloudTrail.

- Runtime Monitoring: Detect threats for clusters and containers within your AWS environment. Runtime Monitoring uses an Amazon GuardDuty security agent that adds runtime visibility into individual Amazon ECS workloads, for example, file access, process execution, and network connections.
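Two of these tools are configured with small, declarative payloads. The sketch below builds the keyword arguments for boto3’s `cloudwatch.put_metric_alarm` (a service CPU alarm in the `AWS/ECS` namespace) and a `logConfiguration` block for the awslogs driver. The alarm name pattern, threshold, periods, SNS topic ARN, and log group are assumptions; also note that tasks typically need a task execution role allowed to write to CloudWatch Logs for the awslogs driver to work.

```python
def service_cpu_alarm(cluster: str, service: str, sns_topic_arn: str,
                      threshold: float = 80.0) -> dict:
    """Keyword arguments for cloudwatch.put_metric_alarm(**alarm)."""
    return {
        "AlarmName": f"{cluster}-{service}-cpu-high",
        "Namespace": "AWS/ECS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        "Statistic": "Average",
        "Period": 60,            # seconds per datapoint
        "EvaluationPeriods": 5,  # breach must last 5 consecutive datapoints
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # notify an SNS topic when in ALARM
    }


def awslogs_configuration(log_group: str, region: str, stream_prefix: str) -> dict:
    """logConfiguration block for a container definition using the awslogs driver."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": log_group,
            "awslogs-region": region,
            "awslogs-stream-prefix": stream_prefix,
        },
    }


alarm = service_cpu_alarm("demo-cluster", "web", "<sns-topic-arn>")
logs = awslogs_configuration("/ecs/web", "us-east-1", "web")
```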

Automate responses to Amazon ECS errors using EventBridge

Using Amazon EventBridge, you can automate your AWS services and respond automatically to system events such as application availability issues or resource changes. Events from AWS services are delivered to EventBridge in near real time. You can write simple rules to indicate which events are of interest to you and what automated actions to take when an event matches a rule.

You can use Amazon ECS events for EventBridge to receive near real-time notifications regarding the current state of your Amazon ECS clusters. If your tasks are using the EC2 launch type, you can see the state of both the container instances and the current state of all tasks running on those container instances.
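As an example, a rule that reacts whenever a task stops can use an event pattern on `source`, `detail-type`, and `detail.lastStatus`. The pattern below is ordinary EventBridge JSON that could be registered with `events.put_rule(Name=..., EventPattern=json.dumps(pattern))`. The `matches` function is a hypothetical, deliberately tiny subset of EventBridge’s matching rules (exact values only, no prefixes, numeric ranges, or other operators), included only to show how a pattern selects events; the sample events are trimmed illustrations.

```python
STOPPED_TASKS = {
    "source": ["aws.ecs"],
    "detail-type": ["ECS Task State Change"],
    "detail": {"lastStatus": ["STOPPED"]},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matching: every pattern key must exist in the event; a list
    means 'equals one of these values'; a nested dict is matched recursively."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


stopped = {
    "source": "aws.ecs",
    "detail-type": "ECS Task State Change",
    "detail": {"lastStatus": "STOPPED",
               "stoppedReason": "Essential container in task exited"},
}
running = {**stopped, "detail": {"lastStatus": "RUNNING"}}
print(matches(STOPPED_TASKS, stopped), matches(STOPPED_TASKS, running))  # True False
```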

ECS vs. EKS: A Comparative Analysis

Both ECS (Elastic Container Service) and EKS (Elastic Kubernetes Service) are Amazon’s offerings in the realm of container orchestration. While they serve the same purpose, they differ significantly in their architectures, Kubernetes support, scaling mechanisms, flexibility, and community support.

Architecture

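ECS’s control plane is a regional, AWS-managed service that you never operate; it schedules tasks onto EC2, Fargate, or external capacity. EKS gives each cluster its own Kubernetes control plane (API server and etcd), which AWS runs across multiple Availability Zones. In both cases AWS manages the control plane, but EKS exposes the standard Kubernetes API while ECS exposes its own ECS API.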

Kubernetes Support

EKS is essentially a fully managed Kubernetes service. It allows you to run your Kubernetes workloads on AWS without worrying about managing the Kubernetes control plane. ECS, however, uses its own orchestration engine and lacks native Kubernetes support.

Scaling

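Both services can scale automatically, and in both you configure the scaling yourself. In ECS, Service Auto Scaling (through Application Auto Scaling) adjusts a service’s desired task count, and capacity providers with managed scaling adjust the number of EC2 instances; with Fargate there are no instances to scale. In EKS, you typically use the Kubernetes Horizontal Pod Autoscaler for pods and a node autoscaler such as Cluster Autoscaler or Karpenter for nodes.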
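On the ECS side, service scaling is configured through the Application Auto Scaling API: you register the service’s desired count as a scalable target and attach a policy. The sketch builds the keyword arguments for boto3’s `application-autoscaling` client (`register_scalable_target` and `put_scaling_policy`) using a target tracking policy on average service CPU; the cluster and service names, limits, and the 50% target are illustrative assumptions.

```python
def service_scaling_requests(cluster: str, service: str, min_tasks: int,
                             max_tasks: int, target_cpu: float = 50.0) -> tuple:
    """Return (register_scalable_target kwargs, put_scaling_policy kwargs)."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("need 0 <= min_tasks <= max_tasks")
    resource_id = f"service/{cluster}/{service}"
    register = {
        "ServiceNamespace": "ecs",
        "ResourceId": resource_id,
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }
    policy = {
        "PolicyName": f"{service}-cpu-target-tracking",
        "ServiceNamespace": "ecs",
        "ResourceId": resource_id,
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Add or remove tasks to keep average service CPU near this value.
            "TargetValue": target_cpu,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
        },
    }
    return register, policy


register, policy = service_scaling_requests("demo-cluster", "web", 2, 10)
```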

Flexibility

EKS offers greater flexibility than ECS as it allows you to customize and configure Kubernetes to meet your specific requirements. ECS, while effective, offers relatively less flexibility in terms of container orchestration options.

Community

Kubernetes boasts a large and vibrant open-source community, which means EKS benefits from extensive community-driven development and support. ECS, however, has a smaller community and is primarily driven by AWS itself.

Getting Hands-on: Setting up Nginx on ECS

Now that we’ve understood the basics of ECS and how it compares with EKS, let’s get our hands dirty and set up Nginx on ECS.

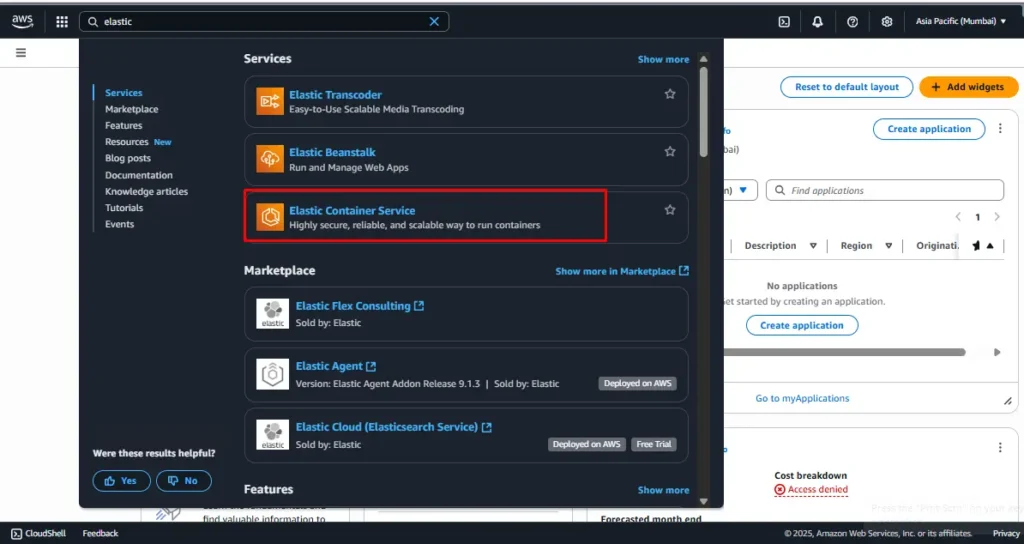

Step 1: Create a Cluster

1. Navigate to the AWS Management Console and open the Amazon ECS console.

2. Click ‘Create Cluster’ and select the ‘AWS Fargate’ infrastructure option.

3. Enter a cluster name. Because Fargate provisions capacity per task, you don’t choose an instance type or number of instances; those settings apply only if you also add EC2 capacity.

4. Click ‘Create’.

Step 2: Create a Task Definition

1. In the left-side navigation pane, choose ‘Task Definitions’, then ‘Create new Task Definition’.

2. Select ‘AWS Fargate’ launch type compatibility and click ‘Next step’.

3. Specify the task name, task role, network mode (Fargate requires awsvpc), and task memory and CPU values.

4. Under ‘Container Definitions’, click ‘Add container’ and provide the necessary details, including the image (for example, nginx:latest), memory limits, and port mappings (container port 80 for Nginx).

5. Click ‘Add’, then ‘Create’.

Step 3: Create a Service

1. Return to your cluster page and click ‘Create’ under ‘Services’.

2. Select the task definition you created earlier, specify the service name, and choose the number of tasks to run.

3. Configure network settings and load balancing as per your requirements. To reach Nginx without a load balancer, run the task in a public subnet, enable a public IP, and allow inbound TCP port 80 in the security group.

4. Click ‘Next step’, review your settings, and click ‘Create’.
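The same three console steps can also be scripted. The sketch below drives an ECS client object through `create_cluster`, `register_task_definition`, and `create_service`, whose names and parameters match the boto3 ECS client; in practice you would pass `boto3.client("ecs")`. To keep it runnable offline, it ships with a hypothetical `DryRunECS` stand-in that only records calls. The cluster name, subnet, security group, and the 256 CPU / 512 MiB size are assumptions.

```python
def deploy_nginx(ecs, cluster: str, subnets: list, security_groups: list,
                 desired_count: int = 1) -> str:
    """Create a cluster, an Nginx Fargate task definition, and a service."""
    ecs.create_cluster(clusterName=cluster)
    response = ecs.register_task_definition(
        family="nginx",
        networkMode="awsvpc",             # required for Fargate
        requiresCompatibilities=["FARGATE"],
        cpu="256", memory="512",          # task-level size; strings in this API
        containerDefinitions=[{
            "name": "nginx",
            "image": "nginx:latest",
            "essential": True,
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
        }],
    )
    task_definition_arn = response["taskDefinition"]["taskDefinitionArn"]
    ecs.create_service(
        cluster=cluster,
        serviceName="nginx",
        taskDefinition=task_definition_arn,
        desiredCount=desired_count,
        launchType="FARGATE",
        networkConfiguration={"awsvpcConfiguration": {
            "subnets": subnets,
            "securityGroups": security_groups,  # must allow inbound TCP 80
            "assignPublicIp": "ENABLED",        # public subnet, no load balancer
        }},
    )
    return task_definition_arn


class DryRunECS:
    """Hypothetical stand-in for boto3.client("ecs") that records calls only."""

    def __init__(self):
        self.calls = []

    def create_cluster(self, **kwargs):
        self.calls.append(("create_cluster", kwargs))
        return {"cluster": {"clusterName": kwargs["clusterName"]}}

    def register_task_definition(self, **kwargs):
        self.calls.append(("register_task_definition", kwargs))
        arn = f"arn:aws:ecs:region:account:task-definition/{kwargs['family']}:1"
        return {"taskDefinition": {"taskDefinitionArn": arn}}

    def create_service(self, **kwargs):
        self.calls.append(("create_service", kwargs))
        return {"service": {"serviceName": kwargs["serviceName"]}}


dry = DryRunECS()
arn = deploy_nginx(dry, "nginx-demo", ["subnet-aaaa1111"], ["sg-bbbb2222"])
print([name for name, _ in dry.calls])
```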

And there you have it! You’ve successfully set up Nginx on ECS. This hands-on experience should give you a better understanding of how ECS operates and how it can be used to manage and orchestrate containerized applications.

Benefits of ECS

- Support for hybrid workloads (ECS Anywhere).

- Fully managed container orchestration.

- Tight integration with the AWS ecosystem (VPC, ELB, IAM, CloudWatch, etc.).

- Flexible launch types: EC2 or Fargate.

- High scalability to run thousands of containers.

- Cost optimization with a pay-as-you-go model.

- Built-in service discovery and load balancing.

- Automatic task recovery and health management.

- Secure by default with IAM roles and policies.

- Simplified deployment and rolling updates.

Conclusion

As we delve deeper into our DevOps journey, we continue to explore and master powerful tools like AWS ECS. With its robust integration capabilities, ECS provides a versatile platform to efficiently manage Docker containers on the AWS cloud.

Despite being somewhat overshadowed by the popularity of Kubernetes and EKS, ECS still holds its ground as a reliable, AWS-native container orchestration service. Its unique features and advantages make it an excellent choice for certain use cases.