Kubernetes services are an essential component of any Kubernetes deployment. They allow you to expose your application to other parts of your cluster and the outside world by providing a stable IP and DNS name. With Kubernetes services, you can easily scale your application, perform load balancing, and even define routing based on a set of rules.

This blog focuses on Ingress, a Kubernetes tool for managing access to a cluster’s services.

What is Kubernetes Ingress?

The literal meaning: Ingress refers to the act of entering.

It is the same in the Kubernetes world as well. Ingress means the traffic that enters the cluster and egress is the traffic that exits the cluster.

Ingress is a native Kubernetes resource like pods, deployments, etc. Using ingress, you can maintain the DNS routing configurations. The ingress controller does the actual routing by reading the routing rules from ingress objects stored in etcd.

Let’s understand ingress with a high-level example.

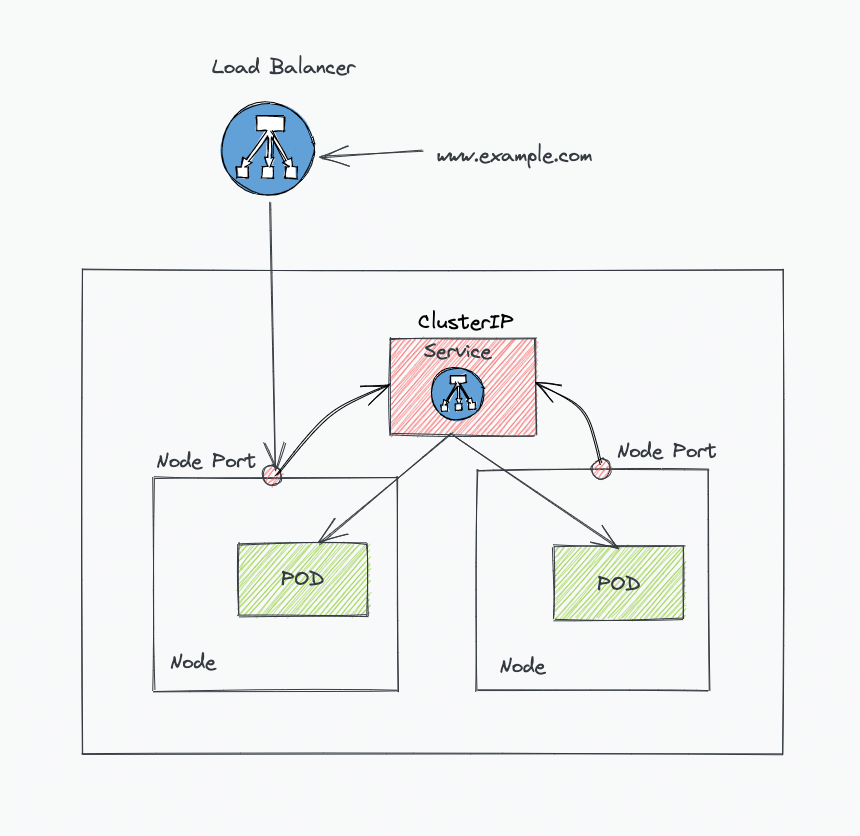

Without Kubernetes Ingress, you expose an application to the outside world by putting a Service of type LoadBalancer in front of the deployment. Here is how it looks. (The NodePort is shown only to illustrate the traffic flow.)

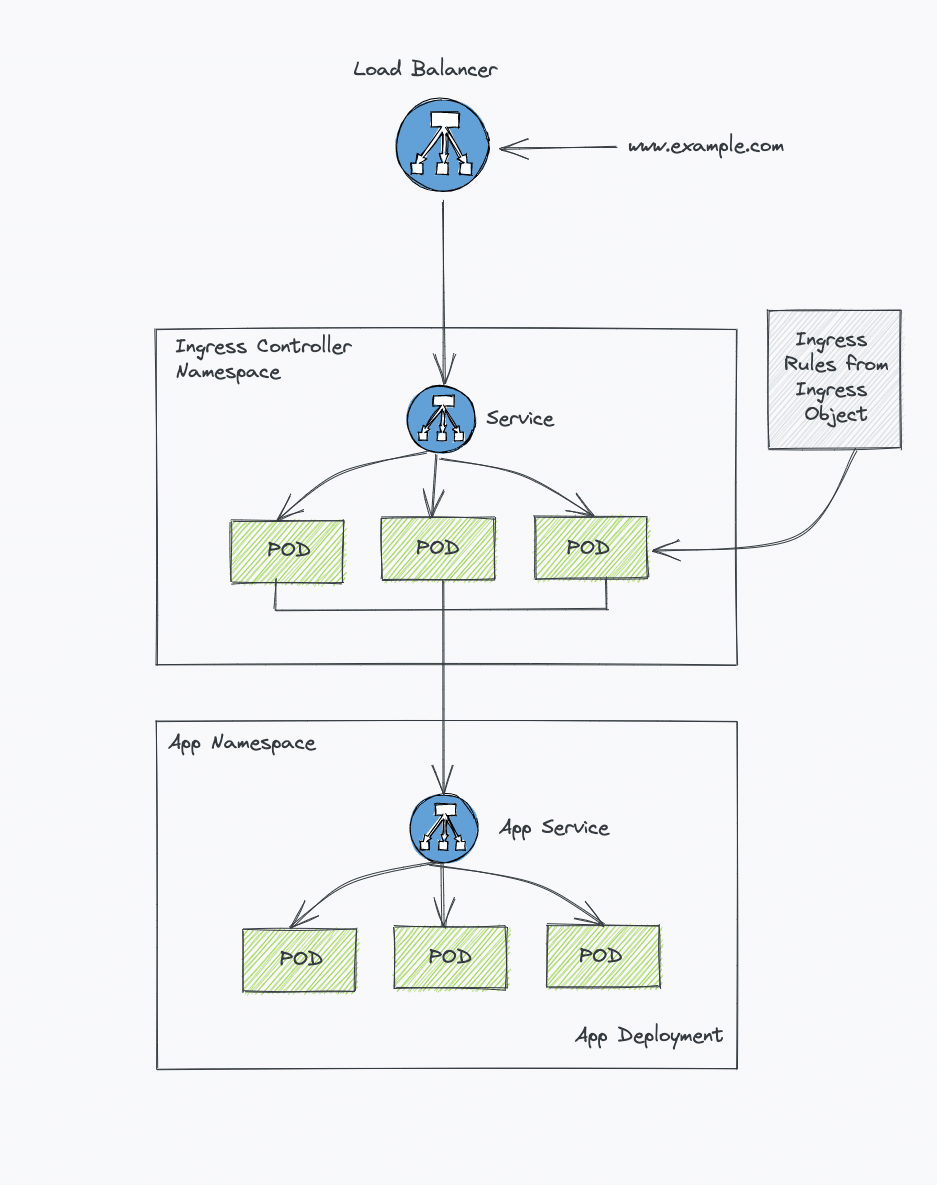
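As a minimal sketch of this approach, a Service of type LoadBalancer might look like the manifest below. The name `hello-service`, the `app: hello` label, and the container port 8080 are placeholders chosen for illustration.

```yaml
# Hypothetical Service that exposes one application directly
# through a cloud load balancer (no Ingress involved).
apiVersion: v1
kind: Service
metadata:
  name: hello-service        # placeholder name
  namespace: dev
spec:
  type: LoadBalancer         # asks the cloud provider for an external load balancer
  selector:
    app: hello               # assumed label on the application's pods
  ports:
  - name: http
    port: 80                 # port exposed by the load balancer
    targetPort: 8080         # assumed container port
```

On most cloud providers, every Service written this way provisions its own external load balancer, which is the overhead Ingress is meant to reduce.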

In the same setup with Ingress, there is a reverse proxy layer (the Ingress controller) between the load balancer and the Kubernetes Service endpoint.

Here is a very high-level view of ingress implementation. In later sections, we will see a detailed architecture covering all the key concepts.

Before Kubernetes Ingress

Before Kubernetes Ingress existed, people had to set up their own ways to send traffic from the outside world into their Kubernetes cluster.

One common way was to deploy Nginx or HAProxy inside Kubernetes and expose it using a LoadBalancer service.

This LoadBalancer received all the external traffic and sent it to the correct internal service.

To control how the traffic was routed, people used a ConfigMap.

The ConfigMap contained the routing rules (for example, which domain should go to which service).

Whenever someone added a new domain or changed a route, they had to update the ConfigMap and then reload or redeploy the Nginx/HAProxy pods so the new rules took effect.

Then came Kubernetes Ingress, which simplified this.

Instead of using a ConfigMap, routing rules are now stored as Ingress objects—a built-in Kubernetes resource.

The Ingress Controller (which is usually a special version of Nginx, HAProxy, or another tool) reads these Ingress rules automatically and updates the routes dynamically—no manual restarts needed.

Some people also used tools like Consul for service discovery, which could update Nginx or HAProxy routes automatically without downtime—basically doing what Ingress does today.

In OpenShift, this idea was already used early on.

OpenShift had a built-in Router (based on HAProxy) that worked like an Ingress Controller.

You just needed to create a Route object (a YAML resource), and OpenShift handled the routing for you, much like Kubernetes Ingress does now.

How Does Kubernetes Ingress work?

If you are a beginner, the way Ingress works can be confusing.

For example, you might ask: I created the Ingress rules, but how do I map them to a domain name or route external traffic to internal deployments?

You need to be very clear about two key concepts to understand that.

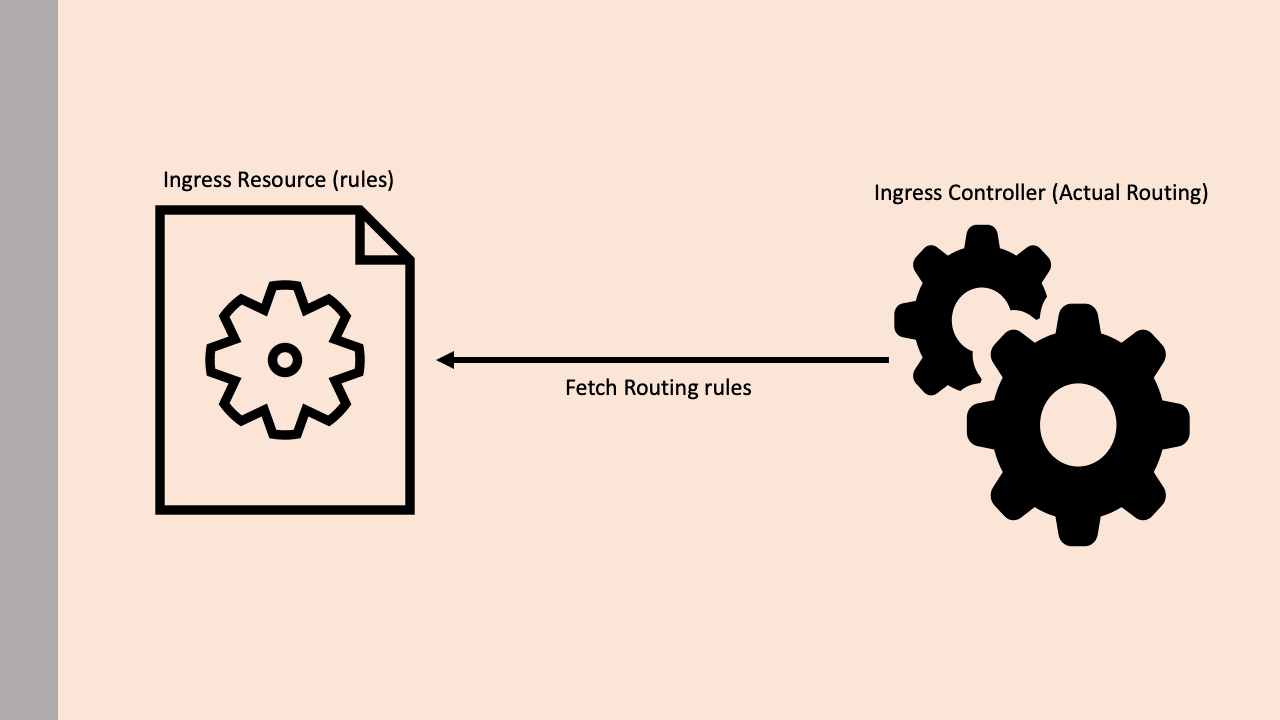

- Kubernetes Ingress Resource: Kubernetes ingress resource is responsible for storing DNS routing rules in the cluster.

- Kubernetes Ingress Controller: Kubernetes ingress controllers (Nginx/HAProxy etc.) are responsible for routing by accessing the DNS rules applied through ingress resources.

Let’s look at both the ingress resource and ingress controller in detail.

Kubernetes Ingress Resource

A Kubernetes Ingress Resource is a built-in object in Kubernetes. It defines how external traffic from the internet reaches services inside the cluster.

Think of it as a set of routing rules that tell Kubernetes where to send incoming requests.

For example:

“If someone visits this domain name, forward them to this service.”

The Ingress resource itself only stores the rules.

It doesn’t route traffic directly.

To make those rules work, you must install an Ingress Controller such as NGINX or HAProxy.

The controller reads the Ingress rules and routes the traffic to the correct service.

Here’s a simple example:

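    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: test-ingress
      namespace: dev
    spec:
      rules:
      - host: test.apps.example.com
        http:
          paths:
          # networking.k8s.io/v1 requires path and pathType on every entry
          - path: /
            pathType: Prefix
            backend:
              # v1 replaces serviceName/servicePort with service.name and service.port
              service:
                name: hello-service
                port:
                  number: 80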

This rule says:

Traffic to test.apps.example.com goes to the hello-service in the dev namespace on port 80.

You can add more routes for different paths, like /api or /login.

You can also include TLS/SSL settings to secure traffic with HTTPS.
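A sketch of what that could look like is shown below, extending the same Ingress with two extra paths and a TLS section. The Services `api-service` and `login-service` and the Secret `test-apps-tls` are hypothetical names, and the Secret is assumed to be a `kubernetes.io/tls` Secret that already exists in the `dev` namespace.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
  namespace: dev
spec:
  tls:
  - hosts:
    - test.apps.example.com
    secretName: test-apps-tls     # assumed existing TLS Secret (certificate and key)
  rules:
  - host: test.apps.example.com
    http:
      paths:
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: api-service     # hypothetical Service
            port:
              number: 80
      - path: /login
        pathType: Prefix
        backend:
          service:
            name: login-service   # hypothetical Service
            port:
              number: 80
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-service
            port:
              number: 80
```

With `Prefix` path types, the longest matching path takes precedence, so `/api` and `/login` are matched before the catch-all `/`.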

Kubernetes Ingress Controller

An Ingress Controller is not built into Kubernetes by default.

This means if you want to use Ingress rules, you first need to install an Ingress Controller in your cluster.

There are many types of Ingress Controllers available. Some are open source (such as NGINX, HAProxy, and Traefik), and others are tied to a specific cloud provider (such as the AWS ALB Ingress Controller or GKE Ingress).

An Ingress Controller works like a reverse proxy server inside your cluster.

In Kubernetes, it is usually set up as a Deployment, and it’s exposed to the outside world using a LoadBalancer service.

So, external traffic first hits the LoadBalancer, then goes to the Ingress Controller, and finally reaches the correct internal service.

You can also have multiple Ingress Controllers in one cluster.

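Each controller typically sits behind its own LoadBalancer and is identified by a unique name called an Ingress Class. An Ingress selects its class through the spec.ingressClassName field (older setups used the kubernetes.io/ingress.class annotation).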

This helps Kubernetes know which Ingress Controller should handle which Ingress rules.
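As a rough illustration, in the `networking.k8s.io/v1` API the class is usually declared as an IngressClass object and referenced from each Ingress through `spec.ingressClassName`. The class name `nginx-internal`, the Service `dashboard-service`, and the host are placeholders. The `controller` value shown is the default identifier used by ingress-nginx; a second controller instance would need to be configured with its own value.

```yaml
# Hypothetical IngressClass that names one controller instance.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-internal               # placeholder class name
spec:
  controller: k8s.io/ingress-nginx   # must match the value the controller is configured with
---
# An Ingress that asks to be handled by that class only.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: internal-dashboard
  namespace: dev
spec:
  ingressClassName: nginx-internal
  rules:
  - host: dashboard.internal.example.com   # placeholder host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: dashboard-service        # hypothetical Service
            port:
              number: 80
```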

How Does an Ingress Controller Work?

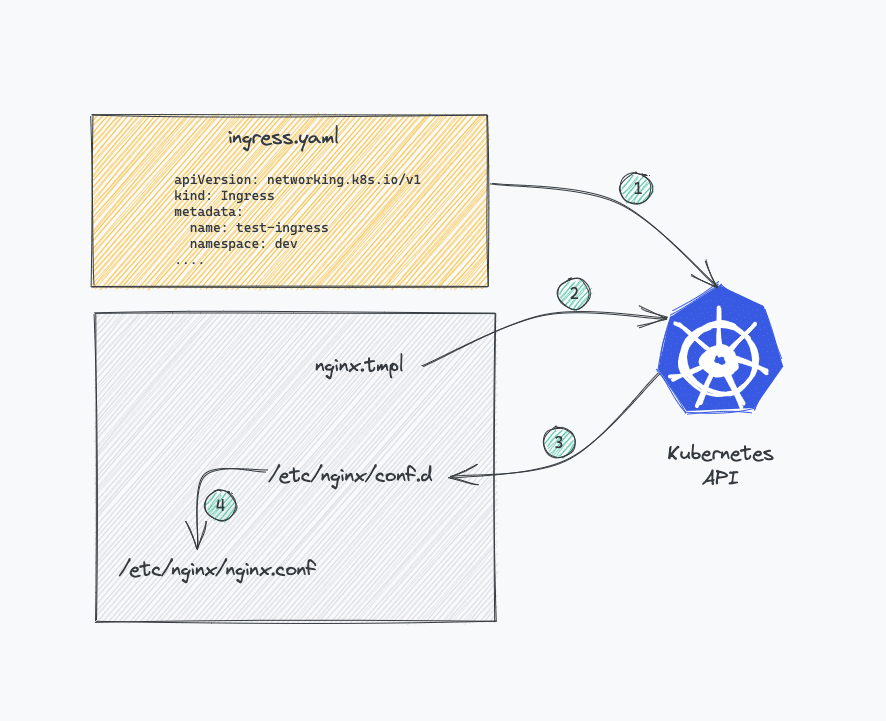

Nginx is one of the most widely used ingress controllers.

So let’s take an example of Nginx ingress controller implementation to understand how it works.

- The `nginx.conf` file inside the Nginx controller pod is a Lua template that can talk to the Kubernetes Ingress API and get the latest values for traffic routing in real time.

- The Nginx controller talks to the Kubernetes Ingress API to check whether any rule has been created for traffic routing.

- If it finds any Ingress rules, the Nginx controller generates a routing configuration in the `/etc/nginx/conf.d` location inside each Nginx pod.

- For each Ingress resource you create, Nginx generates a configuration in the `/etc/nginx/conf.d` location.

- The main `/etc/nginx/nginx.conf` file contains all the configurations from `/etc/nginx/conf.d`.

- If you update the Ingress object with new configurations, the Nginx config is updated again and Nginx does a graceful reload of the configuration.

If you connect to the Nginx ingress controller pod using exec and check the `/etc/nginx/nginx.conf` file, you can see all the rules specified in the Ingress object applied in the conf file.

Ingress & Ingress Controller Architecture

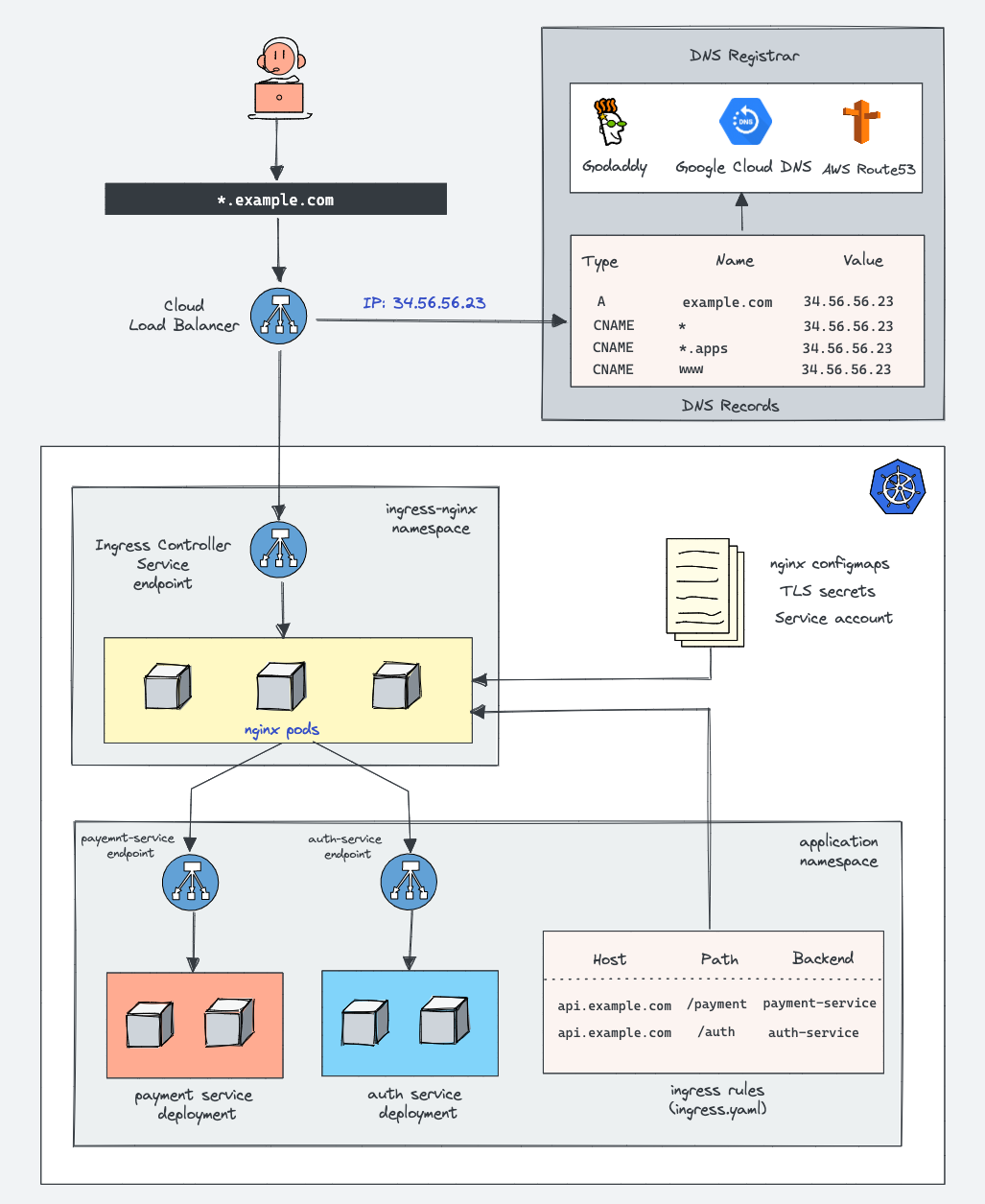

Here is the architecture diagram that explains the Ingress and Ingress controller setup on a Kubernetes cluster.

It shows Ingress rules routing traffic to two applications, payment and auth.

Walking through the diagram step by step shows how a request moves through each part of the Ingress workflow.

1. DNS and Load Balancer

When a user visits api.example.com, the DNS resolves this domain to a public IP address of the cloud load balancer. This load balancer is the main entry point that forwards traffic into the Kubernetes cluster.

2. Ingress Controller Service

The load balancer sends incoming requests to the Ingress Controller Service, which exposes the Ingress Controller pods (usually NGINX). This service ensures that external traffic can reach the controller inside the cluster.
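The controller Service could be sketched roughly as follows. The namespace and labels follow the conventions commonly used by the community ingress-nginx manifests, but treat them as assumptions and check the labels on your own controller pods.

```yaml
# Hypothetical Service that exposes the Ingress Controller pods
# through a cloud load balancer.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx                       # assumed namespace
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: ingress-nginx        # assumed pod labels
    app.kubernetes.io/component: controller
  ports:
  - name: http
    port: 80
    targetPort: 80       # assumed port the controller listens on for HTTP
  - name: https
    port: 443
    targetPort: 443      # assumed port the controller listens on for HTTPS
```

This is the only LoadBalancer Service needed for HTTP traffic in this design; the application Services behind the controller can stay internal.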

3. Ingress Controller (NGINX)

The Ingress Controller acts as a smart router. It reads the Ingress rules, ConfigMaps, and TLS secrets from the cluster and configures NGINX dynamically to route requests based on hostnames and paths.

4. Ingress Rules

Ingress rules define how traffic should flow.

For example:

api.example.com/payment → payment-service

api.example.com/auth → auth-service

These rules tell the controller which backend service should handle each request.
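Written as an Ingress manifest, the two rules above might look like this sketch. The Ingress name, the `nginx` class name, and the Service port 80 are assumptions.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-routes              # placeholder name
spec:
  ingressClassName: nginx       # assumed class name of the installed controller
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /payment
        pathType: Prefix
        backend:
          service:
            name: payment-service
            port:
              number: 80        # assumed Service port
      - path: /auth
        pathType: Prefix
        backend:
          service:
            name: auth-service
            port:
              number: 80        # assumed Service port
```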

5. Backend Services

Each backend (like payment-service and auth-service) has its own Kubernetes Service and Pods. The Service load-balances requests among its Pods, ensuring availability and scalability.
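For illustration, the payment backend could be defined with a Deployment and a ClusterIP Service like the sketch below. The image, labels, replica count, and container port are placeholders.

```yaml
# Hypothetical backend: three payment pods behind an internal Service.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: payment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: payment
  template:
    metadata:
      labels:
        app: payment
    spec:
      containers:
      - name: payment
        image: registry.example.com/payment:1.0   # placeholder image
        ports:
        - containerPort: 8080                     # assumed application port
---
apiVersion: v1
kind: Service
metadata:
  name: payment-service
spec:
  type: ClusterIP          # internal only; the Ingress Controller is the external entry point
  selector:
    app: payment           # matches the pod label above
  ports:
  - port: 80
    targetPort: 8080
```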

6. End-to-End Flow

The Ingress Controller acts as the intelligent gateway for all external traffic entering a Kubernetes cluster. It centralizes routing, security (via TLS and authentication), and scalability — allowing developers to manage traffic with simple, declarative YAML rules instead of manually configuring load balancers for every service.

Types of Kubernetes Ingress Controllers

Kubernetes doesn’t come with a built-in Ingress Controller, so you must install one that fits your environment and requirements. Different Ingress Controllers are built on various reverse proxy or load-balancing technologies, and each offers its own features, flexibility, and performance advantages.

1. NGINX Ingress Controller

The NGINX Ingress Controller is the most commonly used and widely supported option. It is based on the NGINX web server and acts as a reverse proxy that manages HTTP and HTTPS traffic.

There are two main versions:

Community NGINX Ingress Controller (also known as ingress-nginx) — maintained by the Kubernetes community.

NGINX Plus Ingress Controller — a commercial version provided by F5 with added enterprise features like advanced load balancing, dynamic reconfiguration, and built-in monitoring.

NGINX controllers are known for being lightweight, fast, and highly configurable through annotations, ConfigMaps, and custom templates. They are ideal for most general-purpose Kubernetes clusters.

2. HAProxy Ingress Controller

The HAProxy Ingress Controller uses the HAProxy load balancer, known for its high performance and reliability. It offers very efficient traffic handling, advanced health checks, connection pooling, rate limiting, and SSL termination.

HAProxy supports dynamic configuration updates, which means routing rules can change without restarting the controller. It’s often used in high-traffic production environments where stability and low latency are critical.

3. Traefik Ingress Controller

Traefik is a modern, cloud-native edge router and load balancer designed for microservices and dynamic environments. The Traefik Ingress Controller is easy to configure and automatically discovers services and routes in real-time.

It provides built-in support for Let’s Encrypt SSL certificates, metrics, dashboards, and middleware for authentication and rate limiting.

Traefik is a good choice for developers who want a simple, flexible, and modern controller with automatic certificate management and easy integration into CI/CD pipelines.

4. AWS ALB Ingress Controller

The AWS Application Load Balancer (ALB) Ingress Controller, now maintained under the name AWS Load Balancer Controller, is designed specifically for Amazon EKS clusters. Instead of running an internal proxy like NGINX, this controller directly configures AWS’s own Application Load Balancer to manage external traffic.

It provides deep integration with AWS services like IAM, Route53, and WAF (Web Application Firewall).

This approach offloads the routing work to AWS infrastructure, improving scalability and reducing cluster overhead.
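A sketch of an Ingress handled by this controller is shown below. It assumes the AWS Load Balancer Controller is installed with an IngressClass named `alb`; the Ingress name, host, and backend Service are placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: payment-alb                                      # placeholder name
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing    # public ALB rather than internal
    alb.ingress.kubernetes.io/target-type: ip            # route directly to pod IPs
spec:
  ingressClassName: alb                                  # assumed class name
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /payment
        pathType: Prefix
        backend:
          service:
            name: payment-service
            port:
              number: 80
```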

5. GCE Ingress Controller

The GCE (Google Compute Engine) Ingress Controller is the default option in Google Kubernetes Engine (GKE). It integrates tightly with Google Cloud’s HTTP(S) Load Balancer.

When you create an Ingress resource in GKE, the controller automatically provisions and configures a Google Cloud Load Balancer with SSL certificates, backend services, and health checks.

This makes it a good choice for teams using GKE who want minimal manual configuration and native cloud integration.

6. Kong Ingress Controller

Kong is an API gateway built on NGINX and OpenResty. The Kong Ingress Controller combines Ingress management with full API gateway features like authentication, rate limiting, transformations, and analytics.

It’s ideal for organizations exposing microservices as APIs because it provides both traffic routing and API management in one tool.
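As a hedged sketch, rate limiting with the Kong Ingress Controller is typically expressed as a KongPlugin resource attached to an Ingress through an annotation. The plugin name, limit, class name `kong`, host, and backend Service below are assumptions for illustration, and the exact fields may differ between Kong versions.

```yaml
# Hypothetical rate-limiting plugin: 5 requests per minute per client.
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: rate-limit-5-per-minute
plugin: rate-limiting
config:
  minute: 5
  policy: local            # counters kept in memory on each Kong node
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: payment-api
  annotations:
    konghq.com/plugins: rate-limit-5-per-minute   # attach the plugin to this Ingress
spec:
  ingressClassName: kong                          # assumed class name
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /payment
        pathType: Prefix
        backend:
          service:
            name: payment-service
            port:
              number: 80
```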

7. Istio Ingress Gateway

When using a service mesh like Istio, the Istio Ingress Gateway acts as the entry point for all external traffic into the mesh.

Unlike standard Ingress controllers, it uses Envoy proxies and supports advanced traffic management features — such as canary deployments, traffic splitting, retries, fault injection, and mutual TLS.

It’s a powerful option for complex service mesh environments where fine-grained control over traffic is required.
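To make the traffic-splitting idea concrete, here is a sketch using Istio's Gateway and VirtualService resources. It assumes the default `istio: ingressgateway` selector label, and the Service `payment-service-canary` is a hypothetical second version of the backend. Newer Istio releases also serve these resources under `networking.istio.io/v1`.

```yaml
# Gateway: binds the Istio ingress gateway to a host and port.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: api-gateway
spec:
  selector:
    istio: ingressgateway        # assumed label on the ingress gateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "api.example.com"
---
# VirtualService: sends 90% of /payment traffic to the stable
# Service and 10% to a canary Service.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: payment
spec:
  hosts:
  - "api.example.com"
  gateways:
  - api-gateway
  http:
  - match:
    - uri:
        prefix: /payment
    route:
    - destination:
        host: payment-service
        port:
          number: 80
      weight: 90
    - destination:
        host: payment-service-canary   # hypothetical canary Service
        port:
          number: 80
      weight: 10
```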

When to Use an Ingress Controller

An Ingress Controller is most useful when you need to manage external access to multiple services within a Kubernetes cluster efficiently. If your application exposes multiple HTTP or HTTPS endpoints, an Ingress Controller allows you to consolidate traffic through a single entry point instead of creating multiple LoadBalancer or NodePort services. It’s also essential when you need features like host-based or path-based routing, SSL/TLS termination, authentication, or traffic rewriting. For smaller clusters or internal-only services, a Service of type ClusterIP or NodePort may be sufficient, but as your application scales and traffic management becomes complex, using an Ingress Controller simplifies operations, improves security, and centralizes control over external access.

Difference between an Ingress Controller and a Service (Svc) in Kubernetes:

| Feature | Ingress Controller | Service (Svc) |
|---|---|---|
| Definition | A component that implements Ingress rules to manage external access to cluster services. | An abstraction that defines a logical set of pods and a policy to access them. |
| Purpose | Routes external HTTP/HTTPS traffic into the cluster. | Exposes a set of pods internally or externally and enables pod-to-pod communication. |
| Traffic Type | Primarily HTTP and HTTPS. | Can be TCP, UDP, or HTTP (depending on type). |
| External Access | Can expose multiple services via a single external endpoint using routing rules. | Exposes a service via ClusterIP, NodePort, or LoadBalancer (one service per external endpoint). |
| Routing Logic | Supports host-based and path-based routing. | No path/host routing; traffic goes directly to the service. |
| TLS/SSL Support | Can handle SSL termination for HTTPS. | No built-in SSL termination; requires external handling. |
| Complexity | More complex; needs an Ingress Controller implementation (e.g., NGINX, Traefik). | Simple; built-in Kubernetes object. |
| Load Balancing | Provides load balancing between service endpoints (pods). | Provides basic load balancing across pods of the service. |
| Annotations / Advanced Features | Supports rate-limiting, authentication, redirects, rewrites, etc. | Minimal advanced features; mostly just service type and port mapping. |
| Use Case | Ideal for managing multiple services behind a single domain and handling HTTP routing. | Ideal for internal communication between pods or exposing a single service externally. |

Best Practices for Ingress Controller

1. Use a Well-Supported Ingress Controller

Choosing a stable and widely adopted Ingress Controller like NGINX, Traefik, or HAProxy ensures long-term support and community guidance. Well-supported controllers receive frequent updates, bug fixes, and security patches, reducing risks of vulnerabilities. Additionally, they often provide comprehensive documentation, making configuration and troubleshooting easier for both beginners and advanced users.

2. Enable TLS/SSL for Secure Traffic

Always configure TLS/SSL termination at the Ingress Controller level to encrypt traffic between clients and your cluster. This not only protects sensitive data but also improves compliance with security standards. Using tools like Let’s Encrypt for automatic certificate management simplifies maintenance and ensures certificates are always valid without manual intervention.
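One common way to automate this is cert-manager, which is an assumption here rather than something this article sets up. The sketch below presumes cert-manager is installed and that a ClusterIssuer named `letsencrypt-prod` already exists; the Ingress name, class name, Secret name, and backend Service are placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-tls                                        # placeholder name
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod   # assumed existing ClusterIssuer
spec:
  ingressClassName: nginx                              # assumed class name
  tls:
  - hosts:
    - api.example.com
    secretName: api-example-com-tls    # cert-manager stores the issued certificate here
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api-service          # hypothetical Service
            port:
              number: 80
```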

3. Use Path and Host-Based Routing Efficiently

Ingress Controllers support both path-based and host-based routing. Structure your routes logically to prevent conflicts and simplify maintenance. For example, separating services by subdomain (api.example.com, web.example.com) or by URL path (/api, /web) makes the traffic flow clearer and reduces potential routing errors as your application scales.
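A minimal sketch of the subdomain layout is shown below, with one rule per host. The Services `api-service` and `web-service` and the class name are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-hosts            # placeholder name
spec:
  ingressClassName: nginx        # assumed class name
  rules:
  - host: api.example.com        # API traffic
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api-service    # hypothetical Service
            port:
              number: 80
  - host: web.example.com        # web front-end traffic
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web-service    # hypothetical Service
            port:
              number: 80
```

Keeping one host per rule like this makes it easy to see at a glance which Service owns which domain.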

4. Apply Rate Limiting and Security Policies

Use the advanced features of your Ingress Controller to protect services from abuse. Rate limiting, IP allowlisting, and authentication policies help mitigate DDoS attacks and block unauthorized access. Implementing these controls at the Ingress layer reduces load on backend services while keeping security management centralized.
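With the community ingress-nginx controller, for example, these policies are set through annotations on the Ingress. The sketch below assumes that controller; the limit, CIDR range, names, and backend Service are placeholders, and other controllers use different annotations or custom resources.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: auth-protected             # placeholder name
  annotations:
    # Allow roughly 10 requests per second per client IP.
    nginx.ingress.kubernetes.io/limit-rps: "10"
    # Only accept traffic from this source range (example CIDR).
    nginx.ingress.kubernetes.io/whitelist-source-range: "203.0.113.0/24"
spec:
  ingressClassName: nginx          # assumed class name
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /auth
        pathType: Prefix
        backend:
          service:
            name: auth-service
            port:
              number: 80
```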

5. Monitor and Log Traffic

Enable logging and monitoring on your Ingress Controller to track traffic patterns, detect anomalies, and troubleshoot errors. Collect metrics like request count, latency, and error rates to optimize performance. Integrating with monitoring tools such as Prometheus and Grafana allows proactive issue detection and helps in capacity planning.
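If the controller is installed with the community ingress-nginx Helm chart (an assumption, since this article does not cover installation), metrics can be switched on with a values fragment along these lines. The ServiceMonitor option additionally assumes the Prometheus Operator is running in the cluster.

```yaml
# Hypothetical values.yaml fragment for the ingress-nginx Helm chart.
controller:
  metrics:
    enabled: true          # expose Prometheus metrics from the controller
    serviceMonitor:
      enabled: true        # requires the Prometheus Operator CRDs
```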

6. Keep Configurations Declarative and Version-Controlled

Always define your Ingress resources in YAML manifests stored in version control systems. This ensures changes are auditable, reproducible, and easy to roll back if needed. Declarative configuration also aligns with GitOps practices, making deployments more predictable and reducing the risk of human errors.

7. Limit Ingress Rules per Controller

While a single Ingress Controller can handle multiple services, overloading it with too many rules can degrade performance. Consider splitting traffic across multiple Ingress Controllers for high-traffic environments or critical applications to ensure stability and maintainability.

Conclusion

Kubernetes Ingress and Ingress Controllers provide a powerful, flexible way to manage external access to services within a cluster. By centralizing traffic routing, SSL/TLS termination, and advanced features like host-based or path-based routing, Ingress Controllers simplify the complexity of exposing multiple services while improving security and scalability. Choosing the right controller, following best practices, and leveraging declarative configuration ensures a robust and maintainable architecture. Whether you are running a small cluster or a large-scale microservices environment, implementing an Ingress Controller helps streamline traffic management, reduce operational overhead, and provide a seamless experience for both developers and end users.