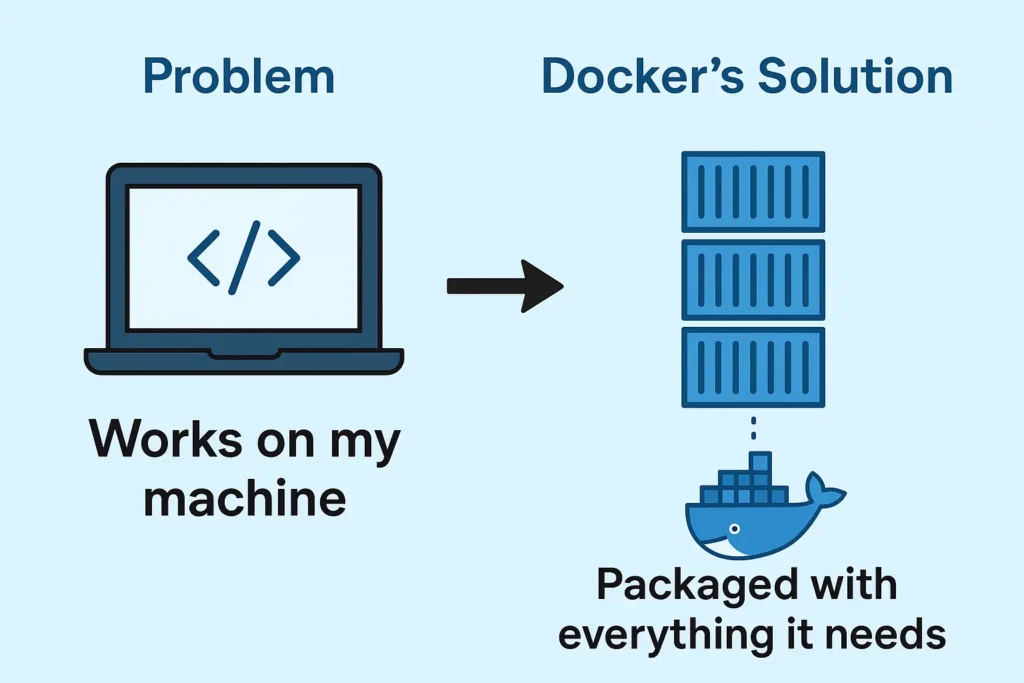

In today’s fast-paced software world, consistency and speed are crucial. Docker has changed the way developers build, test, and deploy applications by allowing them to package apps and all their dependencies into lightweight, portable containers. Whether you’re running your app on a laptop, server, or in the cloud, Docker ensures it behaves the same everywhere, eliminating the classic “works on my machine” problem. This guide will walk you through the basics of Docker, its advantages, and how to get started on Ubuntu.

What Is Docker?

Docker is a tool that lets you package an application and all its dependencies (like libraries, settings, and environment) into a container. A container is like a lightweight, portable box that can run your app anywhere—your laptop, a server, or the cloud—without worrying about differences in the environment.

Key points in simple words:

- Lightweight and fast – Unlike traditional virtual machines, which each need a full guest OS, Docker containers share the host's OS kernel. This makes them smaller and faster to start.

- Portable – The same container will run the same way on any machine. This solves the “works on my machine” problem.

- Isolated – Each container runs separately, so one app won’t interfere with another.

- Cross-platform – Docker works on Linux, Windows, and macOS.

- OS-level virtualization – Instead of virtualizing hardware the way hypervisors such as VMware do, Docker isolates apps at the OS level, which makes it efficient.
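The shared-kernel point can be checked directly. The following is a minimal sketch, assuming a Linux host with Docker installed and permission to talk to the daemon; the function names and the choice of the alpine image are illustrative. On a Linux host both lines should report the same kernel release, while under Docker Desktop on Windows or macOS the container reports the kernel of the helper Linux VM instead.

```shell
#!/bin/sh
# Kernel release as seen by the host.
host_kernel() { uname -r; }

# Kernel release as seen inside a throwaway Alpine container.
# Assumes Docker is installed and the daemon is reachable.
container_kernel() { docker run --rm alpine uname -r; }

echo "host:      $(host_kernel)"
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  echo "container: $(container_kernel)"
else
  echo "container: skipped (Docker not available)"
fi
```

The guard around the container call keeps the script harmless on machines where Docker is not set up yet.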

Before Docker

Before Docker, deploying applications across different environments was often cumbersome and error-prone. Each environment—development, testing, staging, production—might have different operating systems, libraries, or software versions. This led to the classic “works on my machine, but not on yours” problem.

Key challenges included:

- Dependency conflicts: Applications required specific library versions that might not exist or conflict on other machines.

- Configuration drift: Server configurations varied, causing unexpected behavior when moving applications between environments.

- Heavy resource usage: Traditional virtual machines were used to isolate apps, but each VM included a full OS, consuming more CPU, memory, and storage.

- Slow setup: Spinning up environments required manual installation of OS, dependencies, and app setup, which was time-consuming.

- Limited scalability: Scaling applications horizontally was more complex due to the heavy and inconsistent environment setups.

After Docker

Docker solved these problems by packaging applications and all their dependencies into containers—lightweight, portable, and consistent execution units.

Key improvements include:

- Efficiency: Containers start in seconds, use fewer system resources, and simplify application deployment.

- Portability: Containers run consistently across any environment, including developer laptops, on-prem servers, and cloud platforms.

- Consistency: Same behavior in development, testing, and production, eliminating “works on my machine” issues.

- Lightweight: Containers share the host OS kernel, so they are much smaller and faster than full VMs.

- Scalability: Ideal for microservices architecture; works seamlessly with orchestration tools like Kubernetes and Docker Swarm.

Docker Architecture

When you first start using Docker, you may treat it as a black box that “just works.” While that’s fine for getting started, a deeper understanding of Docker’s architecture will help you troubleshoot issues, optimize performance, and make informed decisions about your containerization strategy.

Docker’s architecture is designed to ensure efficiency, flexibility, and scalability. It’s composed of several components that work together to create, manage, and run containers. Let’s take a closer look at each of these components.

Docker Architecture: Key Components

Docker’s architecture is built around a client-server model that includes the following components:

1. Docker Client

2. Docker Daemon (dockerd)

3. Docker Engine

4. Docker Images

5. Docker Containers

6. Docker Registries

1. Docker Client

The Docker Client is the primary way users interact with Docker. It’s a command-line tool that sends instructions to the Docker Daemon (which we’ll cover next) using REST APIs. Commands like docker build, docker pull, and docker run are executed from the Docker Client.

When you type a command like docker run nginx, the Docker Client translates that into a request that the Docker Daemon can understand and act upon. Essentially, the Docker Client acts as a front-end for interacting with Docker’s more complex backend components.
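To make the client-to-daemon request concrete, the sketch below sends the same kind of HTTP request with curl instead of the docker command. It assumes the daemon listens on the default Unix socket at /var/run/docker.sock and that your user may read it; the helper name docker_api_get and the DOCKER_SOCK variable are made up for this example.

```shell
#!/bin/sh
# Default Unix socket on a typical Linux install (an assumption; it is configurable).
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Send a GET request to the Docker Engine API over the Unix socket.
# $1 is the API path, for example /version or /containers/json.
docker_api_get() {
  curl --silent --unix-socket "$DOCKER_SOCK" "http://localhost$1"
}

if [ -S "$DOCKER_SOCK" ] && command -v curl >/dev/null 2>&1; then
  docker_api_get /version          # roughly what "docker version" asks the daemon
  echo
  docker_api_get /containers/json  # roughly what "docker ps" asks the daemon
  echo
else
  echo "No Docker socket at $DOCKER_SOCK; skipping API calls"
fi
```

The responses are JSON documents; the Docker Client formats the same data into the tables you see in the terminal.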

2. Docker Daemon (dockerd)

The Docker Daemon, also known as dockerd, is the brain of the entire Docker operation. It’s a background process that listens for requests from the Docker Client and manages Docker objects like containers, images, networks, and volumes.

Here’s what the Docker Daemon is responsible for:

- Building and running containers: When the client sends a command to run a container, the daemon pulls the image if it is not already available locally, creates the container, and starts it.

- Managing Docker resources: The daemon handles tasks like network configurations and volume management.

The Docker Daemon runs on the host machine and exposes a REST API that the Docker Client reaches over a Unix socket or a network interface. It’s also responsible for interacting with container runtimes, which handle the actual execution of containers.
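Because the client and the daemon are separate programs, one can be present while the other is unreachable. The sketch below reports on each side separately; the function name docker_status and its return codes are illustrative choices, not part of Docker.

```shell
#!/bin/sh
# Report whether the client binary exists and whether a daemon answers it.
# Returns 0 when both are fine, 1 when the client is missing,
# 2 when the daemon cannot be reached.
docker_status() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "client: missing"
    return 1
  fi
  echo "client: $(docker --version)"
  if server="$(docker version --format '{{.Server.Version}}' 2>/dev/null)"; then
    echo "daemon: $server"
  else
    echo "daemon: not reachable"
    return 2
  fi
}

docker_status || true
```

A "not reachable" result usually means the service is stopped or the current user lacks permission on the daemon's socket.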

3. Docker Engine

The Docker Engine is the core part of Docker. It’s what makes the entire platform work, combining the client, daemon, and container runtime. Docker Engine runs natively on Linux; on Windows and macOS it is typically used through Docker Desktop, which runs Linux containers inside a lightweight virtual machine.

Docker Engine has historically come in two editions:

- Docker CE (Community Edition): This is the free, open-source edition of Docker that’s widely used for personal and smaller-scale projects.

- Docker EE (Enterprise Edition): The paid, enterprise-level edition comes with additional features like enhanced security, support, and certification. Docker’s enterprise business was acquired by Mirantis in 2019, and this edition is now offered as Mirantis Container Runtime.

The Docker Engine simplifies working with containers by integrating the components required to build, run, and manage them.

4. Docker Images

A Docker Image is a read-only template that contains everything your application needs to run—code, libraries, dependencies, and configurations. Images are the building blocks of containers. When you run a container, you are essentially creating a writable layer on top of a Docker image.

Docker Images are typically built from Dockerfiles, which are text files that contain instructions on how to build the image. For example, a basic Dockerfile might start with a base image like nginx or ubuntu and include commands to copy files, install dependencies, or set environment variables.

Here’s a simple example of a Dockerfile:

FROM nginx:latest

COPY ./html /usr/share/nginx/html

EXPOSE 80

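In this example, we’re using the official Nginx image as the base and copying our local HTML files into the container’s web directory. EXPOSE 80 documents that the server listens on port 80; it does not publish the port on the host by itself.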

Once the image is built, it can be stored in a Docker Registry and shared with others.
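To try the Dockerfile above end to end, the sketch below writes it into a scratch directory next to a one-line HTML page, then builds and runs it. The image name my-nginx-site, the host port 8080, and the page content are all made up for this example, and the build step only runs when a Docker daemon is reachable.

```shell
#!/bin/sh
# Create a scratch project directory (hypothetical layout for this sketch).
workdir="$(mktemp -d)"
mkdir -p "$workdir/html"
printf '<h1>Hello from a container</h1>\n' > "$workdir/html/index.html"

# Same three instructions as the Dockerfile shown above.
cat > "$workdir/Dockerfile" <<'EOF'
FROM nginx:latest
COPY ./html /usr/share/nginx/html
EXPOSE 80
EOF

# Build and run only when a Docker daemon is reachable.
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker build -t my-nginx-site "$workdir" &&
    docker run -d --rm --name my-nginx-site -p 8080:80 my-nginx-site &&
    echo "Open http://localhost:8080, then run: docker stop my-nginx-site" ||
    echo "Build or run failed; see the messages above"
else
  echo "Docker not available; project files are in $workdir"
fi
```

The -p 8080:80 option is what actually publishes the container's port 80 on the host, and --rm removes the container once it is stopped.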

5. Docker Containers

A Docker Container is a running instance of a Docker Image. It’s lightweight and isolated from other containers, yet it shares the kernel of the host operating system. Each container has its own file system, memory, CPU allocation, and network settings, which makes it portable and reproducible.

Containers can be created, started, stopped, and destroyed. A stopped container keeps its writable layer until it is removed, so it can be started again later, including after a host reboot. Because containers are based on images, they ensure that applications will behave the same way no matter where they’re run.

A few key characteristics of Docker containers:

- Isolation: Containers are isolated from each other and the host, but they still share the same OS kernel.

- Portability: Containers can run anywhere, whether on your local machine, a virtual machine, or a cloud provider.
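The create, start, stop, and destroy lifecycle described above maps onto individual docker commands. Here is a minimal sketch, assuming the nginx:latest image can be pulled; the container name lifecycle-demo and the function name are made up for illustration.

```shell
#!/bin/sh
# Walk one container through create -> start -> stop -> remove,
# printing its state after start and after stop.
demo_lifecycle() {
  name="lifecycle-demo"   # hypothetical container name
  docker create --name "$name" nginx:latest &&
    docker start "$name" &&
    docker inspect --format '{{.State.Status}}' "$name" &&
    docker stop "$name" &&
    docker inspect --format '{{.State.Status}}' "$name" &&
    docker rm "$name"
}

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  demo_lifecycle || echo "Lifecycle demo failed; see the messages above"
else
  echo "Docker not available; skipping lifecycle demo"
fi
```

The two inspect calls typically report running and then exited. For a container that should come back on its own after a reboot, docker run accepts a restart policy such as --restart unless-stopped.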

6. Docker Registries

A Docker Registry is a centralized place where Docker Images are stored and distributed. The most popular registry is Docker Hub, which hosts millions of publicly available images. Organizations can also set up private registries to store and distribute their own images securely.

Docker Registries provide several key features:

- Image Versioning: Images are versioned using tags, making it easy to manage different versions of an application.

- Access Control: Registries can be public or private, with role-based access control to manage who can pull or push images.

- Distribution: Images can be pulled from a registry and deployed anywhere, making it easy to share and reuse containerized applications.
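Tags are the part of an image reference after the final colon, and Docker falls back to latest when no tag is given. The helper below is a simplified sketch of that rule written in plain shell; image_tag is a made-up name, and digest references (name@sha256:...) are deliberately not handled.

```shell
#!/bin/sh
# Print the tag part of an image reference, or "latest" when none is given.
# Simplified on purpose: digest references (name@sha256:...) are not handled.
image_tag() {
  last="${1##*/}"   # last path component, e.g. "nginx:1.25"
  case "$last" in
    *:*) echo "${last##*:}" ;;
    *)   echo "latest" ;;
  esac
}

for ref in nginx nginx:1.25 localhost:5000/team/app localhost:5000/team/app:v2; do
  echo "$ref -> tag $(image_tag "$ref")"
done
```

Only the last path component is inspected, so the port in a registry address such as localhost:5000 is not mistaken for a tag. To publish an image to a private registry you would tag it with the registry's address and push it, for example docker tag my-app:1.0 registry.example.com/team/my-app:1.0 followed by docker push registry.example.com/team/my-app:1.0, where registry.example.com and my-app are placeholders.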

How to Install Docker on Ubuntu

Docker is a powerful platform for developing, shipping, and running applications inside containers. Installing Docker on Ubuntu is straightforward if you follow these steps.

Step 1: Update Your System

Before installing Docker, it’s a good practice to update your package list and install dependencies.

sudo apt update

sudo apt upgrade -y

sudo apt install apt-transport-https ca-certificates curl software-properties-common -y

Step 2: Add Docker’s Official GPG Key

Docker packages are signed with a GPG key to ensure authenticity. Add it using the following command:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

Step 3: Add Docker Repository

Add the Docker APT repository to your system to install the latest Docker version:

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Step 4: Update Packages Again

Update the package list to include the Docker repository:

sudo apt update

Step 5: Install Docker Engine

Now, install Docker with:

sudo apt install docker-ce docker-ce-cli containerd.io -y

Step 6: Start and Enable Docker

Start Docker and enable it to run at system startup:

sudo systemctl start docker

sudo systemctl enable docker

Step 7: Verify Docker Installation

Check if Docker is installed and running correctly:

docker --version

You should see something like: Docker version 24.0.1, build abc1234
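The version string only proves the client is installed. For an end-to-end check that the daemon can pull and run an image, a common approach is to run Docker's hello-world image. The sketch below wraps that in a small function; smoke_test and its messages are illustrative, and it assumes network access to Docker Hub.

```shell
#!/bin/sh
# Run the hello-world image as an end-to-end check of the installation.
smoke_test() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker command not found; revisit Step 5"
    return 1
  fi
  if ! docker info >/dev/null 2>&1; then
    echo "daemon not reachable; check Step 6, or rerun this with sudo"
    return 2
  fi
  docker run --rm hello-world
}

smoke_test || echo "smoke test did not pass (exit $?)"
```

On a fresh install your user is usually not allowed to talk to the daemon without sudo, which is why the second check suggests it.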

Also, check the Docker service status:

sudo systemctl status docker

Advantages of Docker

1. Portability

One of Docker's biggest advantages is its portability. Docker containers encapsulate applications and their dependencies, making it easy to move applications between environments. Whether you are working on your local workstation or on a production server in the cloud, the Docker container will run the same way, ensuring consistency across every stage of the application lifecycle.

2. Isolation

Docker containers offer a high level of isolation between applications and their dependencies. Each container runs independently, which means that it will not interfere with other containers on the same machine or server. This is crucial to ensure the security and stability of applications in shared environments.

3. Resource Efficiency

Docker uses fewer resources compared to traditional virtual machines. Because containers share the same underlying operating system kernel, they are much lighter and require less disk space and RAM. This allows more applications to run on a single machine, which saves hardware costs and eases resource management.

4. Scalability

Docker facilitates application scalability. You can create multiple instances of a container and distribute the workload efficiently using container orchestration tools such as Kubernetes or Docker Swarm. This allows you to quickly adapt the capacity of your application according to demand, which is essential in high-load environments.
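Without an orchestrator, running multiple instances by hand looks roughly like the sketch below: each replica gets its own name and host port. The names web-1 to web-N, the base port 8080, and both function names are made up for this example; the loop at the end only previews the mapping and does not call Docker.

```shell
#!/bin/sh
# Host port for replica number $1, counting up from base port $2 (default 8080).
replica_port() {
  echo $(( ${2:-8080} + $1 ))
}

# Start $1 nginx replicas named web-1..web-N (names and ports are illustrative).
# Assumes Docker is installed and the daemon is reachable.
start_replicas() {
  i=1
  while [ "$i" -le "$1" ]; do
    docker run -d --name "web-$i" -p "$(replica_port "$i"):80" nginx:latest
    i=$((i + 1))
  done
}

# Preview the mapping without touching Docker.
for n in 1 2 3; do
  echo "web-$n -> host port $(replica_port "$n")"
done
```

Calling start_replicas 3 would start three containers on ports 8081 to 8083. Tools such as Kubernetes or Docker Swarm automate this bookkeeping and add load balancing and rescheduling on top.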

5. Faster Development and Deployment

Docker simplifies the application development and deployment process. Containers allow developers to work in local environments identical to production environments, which reduces compatibility issues and speeds up the development cycle. In addition, the deployment process becomes simpler and more automatable, saving time and reducing errors.

Conclusion

Docker has revolutionized the way applications are developed, tested, and deployed. By providing lightweight, portable, and isolated containers, it eliminates the “works on my machine” problem and ensures consistent behavior across environments. Its efficiency, scalability, and resource optimization make it an indispensable tool for modern software development. Whether you are a beginner or a professional, learning Docker opens doors to faster development, simplified deployments, and seamless collaboration between development and operations teams. Starting with Docker on Ubuntu is simple, and once set up, you can explore advanced features like Docker Compose, orchestration with Kubernetes, and building microservices architectures.