In today’s world, software needs to be fast, easy to move, and work the same everywhere. Docker Containers have made this possible. They help developers and IT teams put an application and everything it needs (like libraries and settings) into one small package.

This package can run the same way on any computer — whether it’s your laptop, a server, or the cloud.

But what is a container?

How is it different from a normal virtual machine (VM)?

And why is it so important in modern software development?

What Is a Container?

A container is a small, lightweight, and independent unit that includes everything needed to run an application smoothly. It contains the application code, the libraries, the system tools, and the configuration files that the application requires. In simple words, a container is like a lunchbox for your application — it carries all the ingredients your app needs to run, so it doesn’t have to depend on what’s already available on your computer. Because of this, containers make it possible for applications to run the same way everywhere — on your laptop, on another developer’s system, or on a cloud server.

Each container runs as a separate process on the same computer, but it stays isolated from other containers. This means even though multiple containers might be running on the same system, they don’t interfere with each other. Each one has its own space to work — its own files, libraries, and settings — almost like it’s running on its own little computer inside the bigger one. This isolation gives containers security, stability, and consistency while using very few resources. It’s what makes containers so powerful and efficient for modern software development.

How Containers Work

Imagine your computer as a big building and containers as small apartments inside that building.

Each apartment has its own kitchen, bathroom, and furniture (these are like the files, programs, and tools your app needs).

Even though all apartments are inside the same building and share things like electricity and water (the main computer’s system), they don’t mix up each other’s stuff.

This is how containers work — many separate spaces running on one computer, each safely doing its own job.

Now, let’s understand how this happens behind the scenes.

Containers use some special features of the operating system to stay isolated, safe, and fast.

1. Namespaces – Giving Each Container Its Own World

Think of namespaces as invisible walls between containers.

They make sure that every container has its own little world inside the same computer.

For example:

- Each container gets its own process list — so it only sees its own running programs.

- It has its own network stack (interfaces, IP address, and ports), a bit like having its own internet connection.

- It has its own file system — like its own folder where it keeps data.

Because of namespaces, one container cannot see or touch another container’s data or applications.

It’s just like living in separate apartments — you can’t enter your neighbor’s home without permission.
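To see these invisible walls in action, here is a minimal sketch. The helper names are hypothetical and the public alpine image is an assumption chosen for illustration; each function starts a throwaway container and prints what the container can see from the inside.

```shell
# Hypothetical helpers; the alpine image is an assumption for illustration.

# Process list as seen from inside a fresh container (PID namespace).
container_ps() {
  docker run --rm alpine ps
}

# Hostname as seen from inside the container (UTS namespace).
container_hostname() {
  docker run --rm alpine hostname
}

# Network interfaces as seen from inside the container (network namespace).
container_interfaces() {
  docker run --rm alpine ip addr
}
```

Calling container_ps typically lists just the ps process itself, not the programs running on the host, because the container has its own process list.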

2. Control Groups (cgroups) – Sharing Resources Fairly

Your computer’s CPU and memory are like the electricity and water in the building.

If one apartment (container) uses too much, others might not get enough.

To prevent this, the system uses control groups, or cgroups for short.

Cgroups make sure each container gets only its fair share of system resources.

For example:

- You can set one container to use only 20% of the CPU.

- Another container can be limited to 512 MB of memory.

This way, if one container suddenly needs a lot of power, it won’t slow down the others.

It keeps everything balanced and running smoothly.
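The two limits above can be sketched with the --cpus and --memory options of docker run. The helper name run_limited and its arguments are hypothetical, used here only for illustration.

```shell
# Hypothetical helper: start a container capped at 20% of one CPU core
# and 512 MB of memory.
# $1 = container name, $2 = image
run_limited() {
  docker run -d --name "$1" --cpus="0.2" --memory="512m" "$2"
}
```

For example, run_limited web nginx would start an nginx container named web under those limits. Note that --cpus="0.2" means 20% of a single CPU core.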

3. Union File System – Layered Storage

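The Union File System (UnionFS) is like a smart way of stacking layers of files. In Docker on Linux, this layering is typically provided by an overlay-based storage driver such as overlay2.

Imagine you’re making a burger: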

- The bottom bun is your base system layer.

- Then you add a layer for libraries, a layer for application code, and maybe one more for configuration.

Each layer sits on top of the previous one — and when you make a change, the system just adds a new layer instead of rewriting everything.

This makes containers:

- Fast to start, because they reuse existing layers.

- Lightweight, because they only add what’s new.

For example, if ten containers use the same base system, that layer is shared — it’s not copied ten times.

This saves both time and storage space.
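If you want to look at the layers of an image yourself, the following sketch may help. The function names are hypothetical; the docker history and docker image inspect commands they wrap are standard Docker CLI commands.

```shell
# Hypothetical helpers for inspecting image layers.
# $1 = image name, e.g. nginx

# List the layers of an image with their size and the instruction that created them.
show_layers() {
  docker history --format '{{.ID}} {{.Size}} {{.CreatedBy}}' "$1"
}

# Print the content digests of the image's filesystem layers as JSON.
layer_digests() {
  docker image inspect --format '{{json .RootFS.Layers}}' "$1"
}
```

Two images built on the same base system should show the same digests for the layers they share, which is why those layers are stored only once.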

Key Concepts of Docker Containers

Here are the key concepts and principles that work behind Docker Containers.

Containerization

At their core, containers rely on containerization: packaging an application together with all of its dependencies into a single unit. This package, called a container image, includes the runtime environment, libraries, and other components needed to run the application.

Isolation

Docker containers use operating-system-level virtualization to isolate applications. Each container runs as a separate process with its own filesystem, network interface, and process space, independent of the host system.

This isolation keeps containers from interfering with one another’s operations.

Docker Engine

The Docker Engine is the brains behind Docker containers; it builds, launches, and maintains them. The Docker daemon, which operates in the background, and the Docker client, which lets users communicate with the Docker daemon via commands, are two of the parts that make up the Docker Engine.

Image and Container Lifecycle

The creation of a container image is the first step in the lifecycle of a Docker container. A Dockerfile, which outlines the application’s dependencies and configuration, is used to build this image.

Once the image has been built, it can be used to instantiate containers, which are running instances of that image. Containers can then be started, stopped, paused, and restarted as needed.
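The path from Dockerfile to image to container can be sketched as follows. This is only an illustration: the helper name build_and_run, the tiny inline Dockerfile, and the alpine base image are all assumptions, not part of any real project.

```shell
# Hypothetical helper: build a tiny image from an inline Dockerfile, then run it.
# $1 = image tag, e.g. hello:v1 (an illustrative name)
build_and_run() {
  # "-" tells docker build to read the Dockerfile from standard input.
  docker build -t "$1" - <<'EOF'
FROM alpine
CMD ["echo", "hello from a container"]
EOF
  # Start a container from the freshly built image and remove it when it exits.
  docker run --rm "$1"
}
```

In a real project the Dockerfile usually lives in its own file next to the application code, and the build is run against that directory instead of standard input.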

Resource Management

Docker containers provide effective resource management because of their shared kernel architecture and lightweight design. Since containers share the operating system kernel of the host system, overhead is decreased and startup times are accelerated.

To help with performance and scalability, Docker also offers tools for monitoring and limiting resource usage, such as the docker stats command and the CPU and memory limits described earlier.
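As a small sketch of resource monitoring, the docker stats command can print a one-time snapshot of what each running container is using. The helper name usage_snapshot is hypothetical.

```shell
# Hypothetical helper: one-shot snapshot of CPU and memory use
# for all running containers (no live updating).
usage_snapshot() {
  docker stats --no-stream --format 'table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}'
}
```

Without --no-stream, docker stats keeps refreshing the figures until you stop it.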

Portability

One of the main benefits of Docker containers is their portability. Container images are self-contained units that are easy to distribute and deploy across environments, from development and testing to production.

This portability streamlines the deployment process and lowers the possibility of compatibility problems by enabling “build once, run anywhere”.

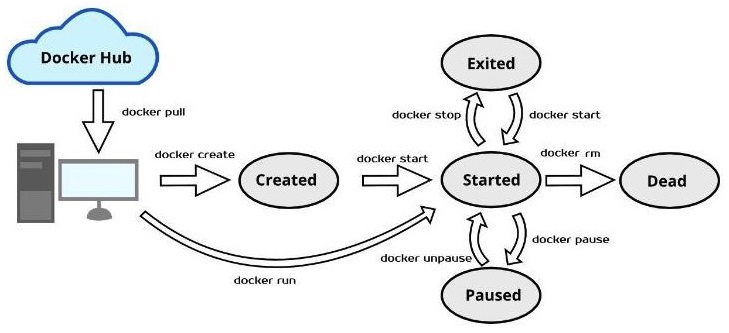

Docker Container Lifecycle

There are five essential states in the Docker container lifecycle: created, started (reported by Docker as running), paused, exited, and dead. Together they cover a container’s life from creation and execution to termination and possible restart.

Understanding these states is important for managing Docker containers and keeping them working properly.

Let’s explore the stages of the Docker container lifecycle:

The Created State

The “created” state is the first stage. When a container is created with the docker create command or a comparable API call, it reaches this phase. The container is not yet running when it is in the “created” state, but it does exist as a static entity with all of its configuration settings defined.

At this point, Docker has prepared the container’s writable filesystem layer and recorded its configuration, but the processes inside the container have not yet begun.

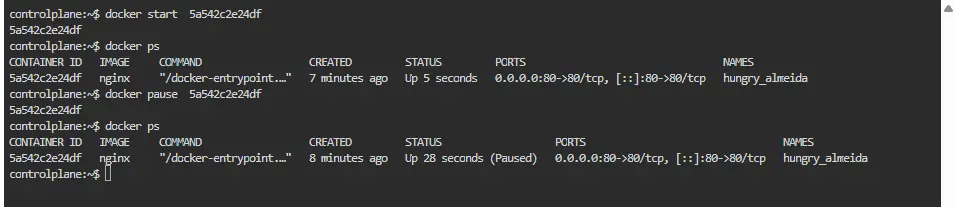

The Started State

The “started” or “running” state is the next stage of the lifecycle. When a container is started with the docker start command or an equivalent API call, it enters this stage.

When a container is in the “started” state, its processes are launched and it starts running the service or application that is specified in its image. While they carry out their assigned tasks, containers in this state actively use CPU, memory, and other system resources.

The Paused State

Throughout their lifecycle, containers may also go into a “paused” state. When a container is paused with the docker pause command, its processes are suspended, thereby stopping its execution.

A paused container keeps its configuration settings and its memory allocation, but its processes receive no CPU time. This state is useful for debugging and for temporarily freeing up CPU, because it halts execution without stopping the container completely.

The Exited State

A container in the “exited” state has stopped because its main process has exited. Containers enter this state when they finish the tasks they are intended to complete or when they run into errors that force them to terminate.

An “exited” container stays stopped: it keeps its filesystem and configuration settings but runs no processes. In this state, a container can be restarted with the docker start command or deleted with the docker rm command.

The Dead State

A container in the “dead” state is defunct: Docker tried to stop or remove it and could not finish the job. This usually happens when a problem on the host, such as a resource kept busy by another process, leaves the container only partially removed.

A “dead” container runs no processes and cannot be restarted. To free whatever resources it still holds, remove it with the docker rm command.
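The states described above can be observed with a short walk-through. This is a sketch under assumptions: the function names and the container name you pass in are hypothetical, and the state is read with docker inspect.

```shell
# Print the current lifecycle state of a container
# (for example created, running, paused, or exited).
container_state() {
  docker inspect --format '{{.State.Status}}' "$1"
}

# Hypothetical demo: walk one container through its states, printing each one.
# $1 = container name, $2 = image
lifecycle_demo() {
  docker create --name "$1" "$2" >/dev/null && container_state "$1"
  docker start "$1" >/dev/null && container_state "$1"
  docker pause "$1" >/dev/null && container_state "$1"
  docker unpause "$1" >/dev/null && container_state "$1"
  docker stop "$1" >/dev/null && container_state "$1"
  docker rm "$1" >/dev/null
}
```

With a long-running image such as nginx, lifecycle_demo demo nginx should print created, running, paused, running, and exited in turn. The dead state does not appear because it only arises when something goes wrong on the host.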

Important Docker Container Commands

Now that you have understood the basics of Docker containers and how they work, let’s look at the most important Docker container commands with the help of examples.

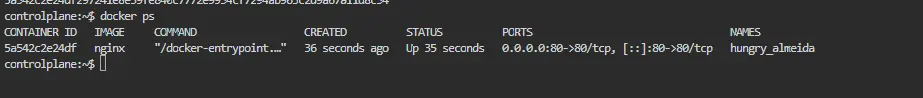

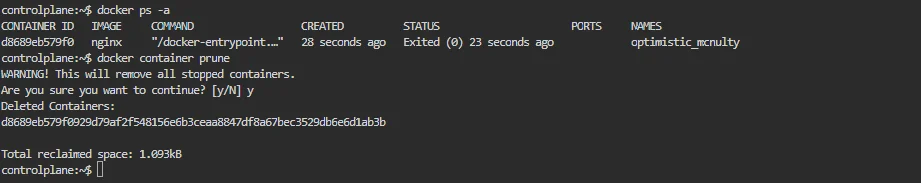

Listing all Docker Containers

The docker ps command lists the containers on the Docker host. By default it shows only running containers; add the -a or --all flag to include stopped ones.

$ docker ps

This command displays the IDs, names, statuses, and other details of all running containers. It returns an empty list if no containers are running.

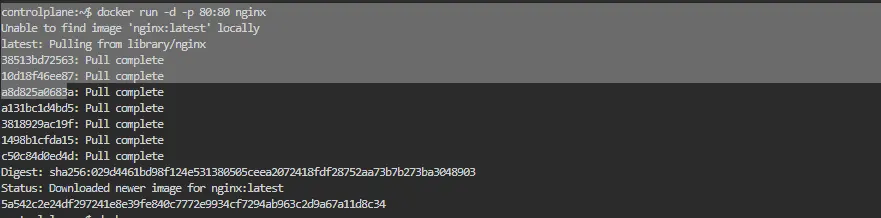
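Building on docker ps, here is a small sketch of two common variations. The helper names are hypothetical; the --format and --filter options are standard docker ps options.

```shell
# Hypothetical helpers around docker ps.

# List every container, running or stopped, in a compact table.
list_all() {
  docker ps -a --format 'table {{.ID}}\t{{.Names}}\t{{.Status}}'
}

# List only containers in a given state, e.g. exited or paused.
# $1 = status name
list_by_status() {
  docker ps -a --filter "status=$1"
}
```

For example, list_by_status exited shows only containers that have stopped.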

Running a Docker Container

The primary command for creating and starting Docker containers is docker run. If the image isn’t already available locally, Docker pulls it from a registry when you run this command. It then creates a new container from that image and starts it.

With the help of this command, you can specify several options, including volume mounts, environment variables, port mappings, and more, to tailor the container’s configuration to your requirements.

$ docker run -d -p 8080:80 nginx

Here, docker run creates a new container from the “nginx” image and, because of the -d (detached) flag, runs it in the background. The -p 8080:80 option maps host port 8080 to container port 80, making the NGINX web server inside the container reachable on port 8080 of the host.

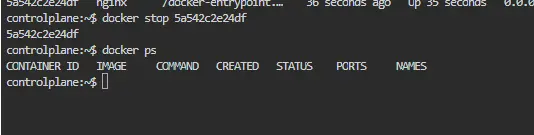

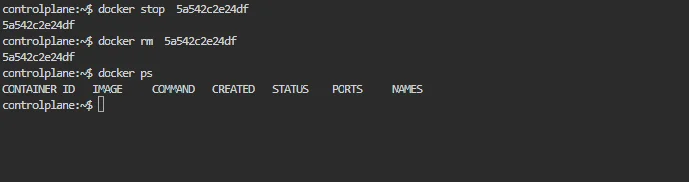
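The other docker run options mentioned above (a name, an environment variable, and a volume mount) can be combined as in this sketch. The helper name run_web, the TZ variable, and the site directory are illustrative assumptions.

```shell
# Hypothetical helper: run nginx with a name, a port mapping, an environment
# variable, and a read-only bind mount. The variable and paths are illustrative.
run_web() {
  docker run -d \
    --name web \
    -p 8080:80 \
    -e TZ=UTC \
    -v "$PWD/site:/usr/share/nginx/html:ro" \
    nginx
}
```

Here the site folder in the current directory is mounted over the default web root of the nginx image, so the files in it are what the server hands out.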

Stopping a Docker Container

The docker stop command stops a container gracefully by sending SIGTERM to its main process. This lets the container finish cleanup work, such as saving state or closing network connections, before it shuts down.

$ docker stop my_container

This command stops the running container named “my_container”. Docker waits a configurable grace period (10 seconds by default) for the container to exit. If the container has not stopped by then, Docker terminates it with a SIGKILL signal.
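The grace period can be changed with the -t option of docker stop. A minimal sketch, with a hypothetical helper name:

```shell
# Hypothetical helper: stop a container, allowing up to $2 seconds
# for cleanup before Docker sends SIGKILL.
# $1 = container name, $2 = grace period in seconds
stop_with_grace() {
  docker stop -t "$2" "$1"
}
```

For example, stop_with_grace my_container 30 gives the container 30 seconds to shut down instead of the default 10.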

Pausing a Running Container

The docker pause command suspends all processes in a running container. This can be helpful for debugging, troubleshooting, and temporarily freeing up CPU time.

$ docker pause my_container

This command suspends the container “my_container”. While it is paused, its processes are frozen and use no CPU time, but the memory they hold stays allocated. The container also keeps its configuration settings.

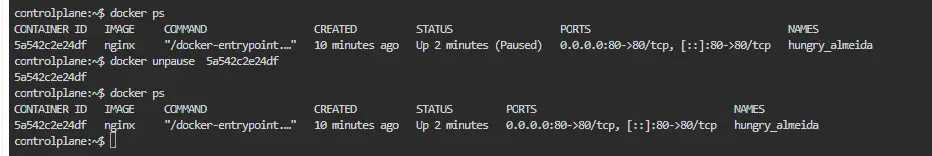

Resuming a Docker Container

The docker unpause command resumes the processes of a paused container. It undoes the effect of the docker pause command and returns the container to the running state.

$ docker unpause my_container

The above command resumes the paused container “my_container” so that its processes carry on as usual.

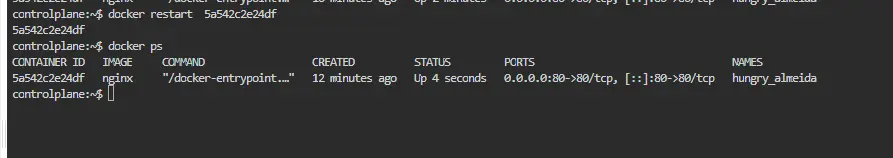

Restarting a Container

The docker restart command is an easy way to stop a running container and start it again. It is often used to reinitialize a container after it has run into problems, or to make the application inside pick up changed configuration.

$ docker restart my_container

This command stops the container named “my_container” and then starts it again. The processes inside the container are terminated and launched afresh, so any modifications can take effect.

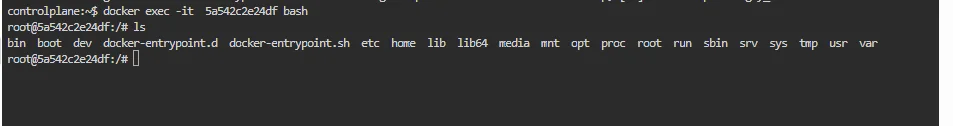

Executing Commands in a Running Docker Container

To run a command inside an already-running container, use the docker exec command. It enables users to run arbitrary commands, like starting a shell session or carrying out a particular program, inside the environment of a container.

$ docker exec -it my_container bash

This command opens an interactive shell session (bash) inside the running “my_container” container. The -i flag keeps STDIN open even when it is not attached, and the -t flag allocates a pseudo-TTY; together they enable interactive input and output.
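docker exec also works without the -it flags when you only need to run a single command and read its output. A minimal sketch, with a hypothetical helper name:

```shell
# Hypothetical helper: run a one-off, non-interactive command
# inside a running container.
# $1 = container name, remaining arguments = command to run
exec_in() {
  name=$1
  shift
  docker exec "$name" "$@"
}
```

For example, exec_in my_container env would print the environment variables that processes inside the container see.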

Removing a Docker Container

To remove one or more Docker containers, use the docker rm command and specify each container by ID or name. By default this command removes only stopped containers; to forcefully remove a running container, add the -f or --force flag.

$ docker rm my_container

The above command deletes the container named “my_container”. Unless the -f flag is used to force removal, the container must be stopped before it can be removed.

To clear up disk space on the Docker host, you can use the docker container prune command to remove all stopped containers. It is a practical way to clean up containers you no longer need and recover resources.

$ docker container prune
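The forced and unattended variants of these cleanup commands can be sketched like this. The helper names are hypothetical; the -f flags are the standard ones for docker rm and docker container prune.

```shell
# Hypothetical cleanup helpers.

# Force-remove a container even if it is still running.
# $1 = container name or ID
force_remove() {
  docker rm -f "$1"
}

# Remove all stopped containers without the confirmation prompt.
prune_stopped() {
  docker container prune -f
}
```

Both commands delete containers for good, so it is worth checking the output of docker ps -a before running them.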

Comparison Between Docker Image and Container

| Aspect | Docker Image | Docker Container |
|---|---|---|
| Definition | A read-only blueprint that contains everything needed to run an application (code, libraries, dependencies, environment variables, etc.). | A runtime instance of a Docker image: the actual environment where the application runs. |
| Nature | Static and immutable. | Dynamic and changeable while running. |
| State | Inactive (does not run by itself). | Active (runs as a process). |
| Mutability | Read-only; cannot be changed once built. | Writable; has a writable layer on top of the image. |
| Lifecycle | Built once and stored in a registry or local cache. | Created, started, paused, stopped, and deleted. |
| Creation Command | Created using docker build (from a Dockerfile). | Created using docker run or docker create (from an image). |
| Storage Location | Stored in the local image cache or in a registry (such as Docker Hub). | Stored on the Docker host. |
| Persistence | Permanent until manually removed. | Remains on the host after it stops, until it is removed; data in its writable layer is lost on removal (unless volumes are used). |
| Versioning | Can be tagged and versioned (e.g., myapp:v1). | Identified by unique Container ID or name. |
| Reusability | Can be reused to create multiple containers. | Tied to one instance; additional copies require new containers. |
| Resource Usage | Consumes disk space only (no CPU or memory). | Consumes CPU, memory, and storage while running. |
| Isolation | No isolation; it is just a file template. | Provides process-level isolation using namespaces and cgroups. |
| Modification | Cannot be modified directly; must be rebuilt. | Can be modified while it exists (changes are lost on removal unless committed). |
| Commit Option | Serves as the base for containers. | Can be committed into a new image using docker commit. |
| Sharing | Shared via registries like Docker Hub, ECR, or private registries. | Not typically shared; used locally on the host. |
| Analogy | Like a class in OOP (template). | Like an object/instance created from that class. |
| Example Command | docker build -t myapp:v1 . | docker run -d --name web myapp:v1 |
| Purpose | Packaging and distribution of applications. | Execution and testing of applications in isolated environments. |

Use Cases of Containers

Containers are widely used in modern software development because they make applications fast, portable, and consistent. Here are some of the main use cases:

1. Application Development and Testing

Developers use containers to create a consistent environment for building and testing applications. Instead of worrying about whether the app will work on another system, they can package the app with all its dependencies in a container. This ensures that the app behaves the same way on a developer’s laptop, a test server, or a production environment.

2. Microservices Architecture

Many companies break large applications into smaller, independent pieces called microservices. Each microservice can run in its own container, isolated from the others. This makes it easier to develop, update, and scale parts of an application independently without affecting the whole system.

3. Continuous Integration and Continuous Deployment (CI/CD)

Containers are ideal for CI/CD pipelines. Developers can package code into containers and automatically deploy them to different environments. This speeds up development and reduces errors because the container ensures the app runs the same way everywhere.

4. Cloud Deployment

Containers are perfect for cloud computing because they are lightweight and portable. Cloud providers can run multiple containers on the same server efficiently, reducing costs. Developers can also move containers easily between different cloud platforms without worrying about compatibility issues.

5. Isolation and Security

Containers isolate applications from the host system and from other containers. This improves security because a problem in one container, or a compromise of it, is much less likely to affect the others. It also lets multiple applications run side by side on the same machine with less risk of interfering with each other.

6. Scalability

Containers make it easy to scale applications up or down. For example, if a website gets more visitors, you can quickly start more containers to handle the traffic. When traffic goes down, you can stop containers to save resources.

7. Legacy Application Modernization

Companies can package old or legacy applications into containers to make them easier to manage, run, and move to modern cloud environments. This avoids the need to completely rewrite the old software.

Conclusion

To sum up, Docker containers have changed how modern software teams build, deploy, and manage applications. They are lightweight, portable environments for packaging and running applications, and they offer consistency, repeatability, resource efficiency, and scalability.

With a range of Docker commands and tools, developers can create, deploy, and manage containers easily, which makes development workflows more efficient and team collaboration smoother. As containerization gains traction, Docker containers are positioned to remain a key component of the software development ecosystem, helping businesses innovate more quickly and deliver value to clients more effectively.